Will Cybersecurity Be Replaced by AI? Future Outlook for 2025

This article breaks down what AI is actually doing in cybersecurity right now, where its limitations lie, and why human experts remain critical in AI-augmented security environments. We’ll also cover which job roles are safest from automation, which certifications are still valuable, and how to future-proof your cybersecurity career against AI disruption. If you're asking whether cybersecurity is still a worthwhile career choice in 2025, the answer lies in how well you understand the shift, not just the tools.

What AI Is Doing in Cybersecurity Right Now

Artificial Intelligence is no longer experimental in cybersecurity—it’s operational. By 2025, nearly every major cybersecurity platform includes some form of AI or machine learning to detect anomalies, block threats, or analyze traffic patterns. But AI is a tool, not a replacement. It’s excellent at pattern recognition, behavioral analysis, and real-time response automation, but it still requires humans to set thresholds, interpret outputs, and make final calls in complex scenarios.

Threat Detection & Automation

Modern security platforms rely on AI to identify and neutralize threats faster than human analysts ever could. Common applications include:

Log analysis across SIEM platforms to surface malicious behavior

Anomaly detection across traffic, user behavior, and login patterns

Auto-isolation of compromised devices on enterprise networks

AI handles these tasks in milliseconds, where a human might take hours. For example, platforms like CrowdStrike and SentinelOne use machine learning algorithms to detect zero-day threats, correlating behavior across thousands of endpoints. This allows organizations to stay ahead of attackers rather than reacting late.

These systems also improve over time: by learning from each detected incident, AI models adapt to new threat variants with less manual intervention. But the rules that define what counts as a threat still come from human-configured baselines, and alerts must be interpreted to avoid false positives or context-blind actions.
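To make the idea of a human-configured baseline concrete, here is a minimal Python sketch of the simplest kind of anomaly detection: learn the normal range of a metric from history, then flag values whose z-score exceeds a threshold a person chose. It is an illustration under stated assumptions (the login counts and the `build_baseline` and `is_anomalous` helpers are hypothetical), not how CrowdStrike, SentinelOne, or any other commercial platform actually works; those use far richer models.

```python
from statistics import mean, stdev


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Learn a simple baseline (mean, sample standard deviation) from past activity."""
    return mean(history), stdev(history)


def is_anomalous(value: float, baseline: tuple[float, float], z_threshold: float = 3.0) -> bool:
    """Flag a value whose z-score exceeds the threshold a human analyst chose."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the history: anything different from it is unusual.
        return value != mu
    return abs(value - mu) / sigma > z_threshold


# Hypothetical hourly login counts for one account over ten recent hours.
history = [4, 5, 3, 6, 5, 4, 5, 6, 4, 5]
baseline = build_baseline(history)

print(is_anomalous(5, baseline))                  # False: within the normal range
print(is_anomalous(40, baseline))                 # True: far outside the baseline
print(is_anomalous(10, baseline, z_threshold=6))  # False: a looser human-set threshold
```

The same event (10 logins in an hour) is an alert at the default `z_threshold=3` but not at `z_threshold=6`. The model supplies the math, but a human decides where "suspicious" starts.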

AI in Phishing, Intrusion & Malware Defense

Phishing remains the most common attack vector, and AI is now a frontline defense in filtering it out. Tools like Microsoft Defender use AI to:

Analyze email headers, tone, and attachment behavior

Compare messages to known phishing templates or impersonation patterns

Flag or block suspected content before it hits user inboxes
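The header checks described above can be approximated with hand-written rules. The Python sketch below uses only the standard library's `email` package to flag three classic signals: a display name that claims a trusted brand the sending domain doesn't match, a Reply-To address on a different domain, and pressure language in the subject. The brand list, keywords, and function names are hypothetical; production filters like Microsoft Defender learn many more signals from data rather than relying on a short rule list like this.

```python
from email import message_from_string
from email.utils import parseaddr

# Hypothetical inputs: brands your users trust, mapped to their real sending domains,
# and words that often signal pressure tactics.
TRUSTED_BRANDS = {"microsoft": "microsoft.com", "paypal": "paypal.com"}
URGENCY_WORDS = ("urgent", "verify", "suspended", "immediately")


def domain_of(address: str) -> str:
    return address.rpartition("@")[2].lower()


def header_red_flags(raw_email: str) -> list[str]:
    """Return human-readable reasons a message's headers look like phishing."""
    msg = message_from_string(raw_email)
    display_name, from_addr = parseaddr(msg.get("From", ""))
    sender_domain = domain_of(from_addr)
    flags = []

    # 1. Display name claims a trusted brand that the sending domain does not match.
    for brand, real_domain in TRUSTED_BRANDS.items():
        legit = sender_domain == real_domain or sender_domain.endswith("." + real_domain)
        if brand in display_name.lower() and not legit:
            flags.append(f"display name impersonates {brand}")

    # 2. Replies would go to a different domain than the one that sent the message.
    _, reply_addr = parseaddr(msg.get("Reply-To", ""))
    if reply_addr and domain_of(reply_addr) != sender_domain:
        flags.append("Reply-To domain differs from sender")

    # 3. Subject line uses pressure language.
    subject = msg.get("Subject", "").lower()
    if any(word in subject for word in URGENCY_WORDS):
        flags.append("urgency language in subject")

    return flags


suspicious = (
    "From: Microsoft Support <support@micros0ft-help.net>\n"
    "Reply-To: billing@another-domain.org\n"
    "Subject: URGENT: verify your account\n"
    "\n"
    "Click the link below.\n"
)
print(header_red_flags(suspicious))
```

Each rule is easy to evade on its own, which is why real filters weigh many such signals together and why analysts still review what gets through.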

In intrusion detection, AI can scan enormous traffic volumes to identify patterns in:

Port scanning, lateral movement, and brute force login attempts

Suspicious file executions or payload obfuscation techniques

Sudden access anomalies in time, geography, or device behavior
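Brute-force detection is a good example of pattern-spotting at volume. A minimal Python sketch, assuming authentication events arrive as `(timestamp, source_ip, succeeded)` tuples in time order: count each IP's failed logins within a sliding time window and flag any IP that crosses a limit. The five-failures-per-60-seconds default is an illustrative assumption, not a recommended setting, and the IP addresses come from reserved documentation ranges.

```python
from collections import defaultdict, deque


def detect_brute_force(events, max_failures=5, window_seconds=60):
    """Return source IPs with at least `max_failures` failed logins inside any
    `window_seconds` window. `events` are (timestamp_seconds, source_ip, succeeded)
    tuples in chronological order."""
    recent_failures = defaultdict(deque)  # source_ip -> timestamps of recent failures
    flagged = set()
    for ts, ip, succeeded in events:
        if succeeded:
            continue
        window = recent_failures[ip]
        window.append(ts)
        # Drop failures that have slid out of the time window.
        while ts - window[0] > window_seconds:
            window.popleft()
        if len(window) >= max_failures:
            flagged.add(ip)
    return flagged


# Hypothetical auth log: one IP hammering the login page, one user mistyping occasionally.
events = [(t, "203.0.113.7", False) for t in range(0, 60, 10)]
events += [(5, "198.51.100.20", False), (200, "198.51.100.20", False), (210, "198.51.100.20", True)]
events.sort()
print(detect_brute_force(events))  # {'203.0.113.7'}
```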

Malware defense benefits too—AI-powered antivirus engines can now recognize fileless malware, polymorphic viruses, and command-and-control behavior that signature-based tools miss. EDR (Endpoint Detection and Response) systems powered by AI map attacker behavior, not just code samples.

Yet these tools don’t run unsupervised. They rely on threat intelligence analysts, SOC engineers, and incident response teams to validate outputs, escalate real threats, and tune false positives.

Key AI-Powered Cybersecurity Capabilities in 2025

Predictive risk scoring: Assessing which systems are most vulnerable

Behavioral biometrics: Tracking typing speed, cursor movement, login rhythm

User and entity behavior analytics (UEBA): Identifying unusual internal actions

Automated incident response: Triggering workflows to contain or mitigate breaches
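Predictive risk scoring ultimately comes down to combining likelihood and impact signals into a ranking. The toy Python version below multiplies a CVSS-style severity (CVSS is a real industry scoring standard) by an internet-exposure weight and a business-criticality rating that humans assign. The 1.5 exposure weight, the `Asset` fields, and the sample assets are all hypothetical; commercial tools use trained models and many more inputs.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    name: str
    max_cvss: float         # highest CVSS base score among its open vulnerabilities (0-10)
    internet_exposed: bool
    criticality: int        # business criticality set by humans: 1 (low) to 5 (high)


def risk_score(asset: Asset) -> float:
    """Toy likelihood x impact score; the weights are illustrative assumptions."""
    likelihood = asset.max_cvss / 10 * (1.5 if asset.internet_exposed else 1.0)
    return round(likelihood * asset.criticality, 2)


def prioritize(assets: list[Asset]) -> list[Asset]:
    """Return assets ordered from most to least urgent."""
    return sorted(assets, key=risk_score, reverse=True)


assets = [
    Asset("lobby-printer", 9.0, False, 1),
    Asset("hr-database", 7.5, False, 5),
    Asset("public-web-server", 9.8, True, 4),
]
for a in prioritize(assets):
    print(a.name, risk_score(a))
```

The lobby printer carries a higher CVSS score than the HR database, yet it ranks last because a person rated its business criticality as low. That criticality input is exactly the kind of context the model cannot infer on its own.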

Despite all of this, AI is still narrow. It can process signals, but it cannot interpret them in their social, cultural, or legal context, which is where human expertise remains irreplaceable.

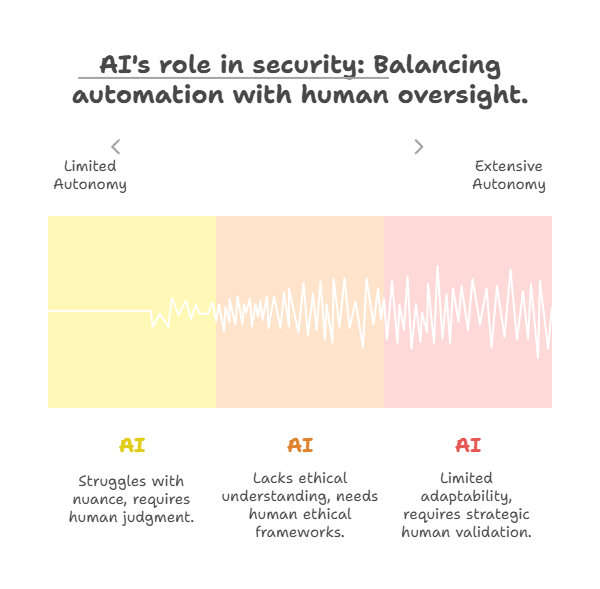

Limitations of AI in Security

While AI has become indispensable for scaling cybersecurity operations, its limitations are critical to understand in 2025. The more organizations depend on automation, the greater the need to recognize where machines fall short—especially in context interpretation, ethical nuance, and real-world adaptability. AI is not sentient; it doesn’t understand intent. It identifies patterns, flags anomalies, and predicts outcomes—but only within the parameters it’s been trained on.

Human Judgment and Context Gaps

AI struggles in environments where nuance, context, or ambiguity matter. A malicious login attempt during off-hours may look identical to a legitimate employee logging in from a different time zone while traveling. AI may:

Flag the activity as anomalous

Auto-lock the account

Trigger a false incident response

But a human analyst would cross-check travel logs, email activity, or context provided by the employee—and likely determine the event was non-malicious. This is where judgment, intent, and experience outperform algorithms.
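One common way to make login monitoring less context-blind is an "impossible travel" check: compare two logins' locations and timing, and only treat the pair as suspicious if the user would have had to move faster than a plane. The Python sketch below assumes geolocated login records and a hypothetical 900 km/h speed ceiling (roughly airliner cruising speed). Just as important, it routes implausible cases to an analyst queue instead of auto-locking the account.

```python
import math
from dataclasses import dataclass


@dataclass
class Login:
    user: str
    hour: float  # hours since an arbitrary reference time
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def triage_login(prev: Login, curr: Login, max_speed_kmh: float = 900.0) -> str:
    """Allow physically plausible logins; send implausible ones to a human, not an auto-lock."""
    distance = haversine_km(prev.lat, prev.lon, curr.lat, curr.lon)
    elapsed = max(curr.hour - prev.hour, 1e-6)  # avoid division by zero
    if distance / elapsed <= max_speed_kmh:
        return "allow"
    # An analyst can check travel records or contact the employee before acting.
    return "review"


london = Login("alice", 0.0, 51.5074, -0.1278)
print(triage_login(london, Login("alice", 8.0, 40.7128, -74.0060)))  # allow
print(triage_login(london, Login("alice", 1.0, 40.7128, -74.0060)))  # review
```

A London-to-New York hop eight hours apart passes; the same hop one hour apart goes to a human, who can then cross-check travel logs or contact the employee.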

In insider threat detection, AI often lacks the emotional or behavioral depth to detect disgruntled employees, low-risk negligence, or social engineering attempts that bypass technical safeguards. It cannot reliably read tone in Slack messages, and it struggles to connect subtle patterns of sabotage that build up over time.

AI also can’t handle decisions involving legal implications, ethical tradeoffs, or multi-stakeholder responses. For instance, during a data breach, choosing whether to notify users, involve law enforcement, or preserve internal evidence requires legal insight, diplomacy, and strategic tradeoffs—none of which AI can simulate.

AI Bias, Breach Risk, and Black Box Systems

AI systems are only as strong as their training data—and this introduces bias. A model trained on North American attack patterns may underperform in detecting threats emerging from Asia, Africa, or Latin America. Similarly, a phishing model trained on English emails may miss sophisticated attacks in multilingual environments.

Bias doesn’t just cause false positives—it creates blind spots, making organizations vulnerable to attack vectors they didn’t even think to model.

Another overlooked risk: AI can itself be attacked. Adversarial inputs—crafted to trick algorithms into making the wrong decisions—can cause AI systems to ignore malware, trust malicious users, or shut down processes entirely. As cybercriminals get smarter, they’ll increasingly target AI pipelines instead of just infrastructure.

Finally, the “black box” problem remains unsolved. Many AI-powered platforms can’t fully explain why a decision was made. This creates accountability issues when:

A legitimate user is locked out of their account

A threat is missed and results in a breach

An alert is dismissed without justification

Security teams must be able to audit, validate, and adjust AI actions in real time. Without human oversight, even the most sophisticated platform becomes a risk in itself.
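In practice, auditability starts with recording every automated action in a form a person can review and reverse. The following Python sketch is one possible shape for such a record, assuming the model reports its top contributing factors; the field names, model version string, and `override` workflow are hypothetical, not any vendor's schema. The key design choice is that a human override is stored alongside the original AI action rather than replacing it, and it cannot be saved without a written justification.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


def _utc_now() -> str:
    return datetime.now(timezone.utc).isoformat()


@dataclass
class AutomatedDecision:
    """One auditable record per action an AI system takes on its own."""
    event_id: str
    model_version: str
    score: float
    threshold: float
    action: str                 # what the system did automatically
    top_factors: dict           # feature name -> contribution the model reported
    decided_at: str = field(default_factory=_utc_now)
    analyst_action: Optional[str] = None
    analyst: Optional[str] = None
    justification: Optional[str] = None

    def override(self, analyst: str, new_action: str, justification: str) -> None:
        """Record a human override; the original automated action is preserved."""
        if not justification.strip():
            raise ValueError("an override must include a justification")
        self.analyst, self.analyst_action, self.justification = analyst, new_action, justification

    def to_log_line(self) -> str:
        """Serialize as one JSON line for an append-only audit log."""
        return json.dumps(asdict(self), sort_keys=True)


decision = AutomatedDecision(
    event_id="evt-1042",
    model_version="ueba-model-v3",
    score=0.91,
    threshold=0.80,
    action="lock_account",
    top_factors={"login_hour_unusual": 0.42, "new_device": 0.31},
)
decision.override("j.doe", "restore_access", "User confirmed travel; login verified by phone")
print(decision.to_log_line())
```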

AI isn’t replacing cybersecurity. It’s evolving it. But for AI to deliver real protection, it must operate under human control, ethical frameworks, and strategic validation.

Hybrid Models: AI and Human Collaboration

The most effective cybersecurity strategy in 2025 is not AI versus humans—it’s AI with humans. Organizations that succeed in defending against modern threats use hybrid models, where AI handles scale and speed, while humans provide judgment, escalation, and contextual oversight. This collaboration isn't optional—it’s the only way to close the widening gap between attack sophistication and defense capacity.

Augmented Response Systems

AI accelerates what analysts do, but it doesn't replace their decision-making. Modern SOCs (Security Operations Centers) use AI-augmented response systems that empower analysts with real-time insights, without fully automating response actions.

Key benefits of augmented systems:

AI scans millions of logs in seconds, surfaces correlated threats, and highlights anomalies

Analysts then triage alerts based on impact, legitimacy, and incident context

Systems like SOAR (Security Orchestration, Automation and Response) allow analysts to launch pre-configured responses, like isolating a device or blocking a domain
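The split between what runs automatically and what waits for an analyst is usually encoded in the playbook itself. Here is a minimal Python sketch, assuming a hypothetical playbook that maps alert types to pre-configured responses and marks high-impact actions (isolating a device, disabling an account) as requiring human approval. Real SOAR platforms express this in their own playbook formats; nothing here reflects a specific product's API.

```python
from dataclasses import dataclass

# Hypothetical playbook: alert type -> (pre-configured response, needs human approval?)
PLAYBOOK = {
    "malicious_domain": ("block_domain", False),
    "compromised_host": ("isolate_device", True),
    "credential_theft": ("disable_account", True),
}


@dataclass
class Alert:
    alert_id: str
    kind: str
    confidence: float  # model confidence, 0.0 to 1.0


def plan_response(alert: Alert, auto_run_confidence: float = 0.95) -> tuple[str, str]:
    """Decide whether a playbook step runs automatically or waits for an analyst."""
    # Unknown alert types fall back to an analyst-reviewed ticket.
    action, needs_approval = PLAYBOOK.get(alert.kind, ("open_ticket", True))
    if needs_approval or alert.confidence < auto_run_confidence:
        return "queue_for_analyst", action
    return "execute", action


for alert in [
    Alert("A-1", "malicious_domain", 0.99),
    Alert("A-2", "compromised_host", 0.99),
    Alert("A-3", "malicious_domain", 0.70),
]:
    print(alert.alert_id, plan_response(alert))
```

Low-impact, high-confidence actions such as blocking a known-bad domain can run on their own, while anything disruptive, or anything the model is unsure about, lands in front of a person.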

This balance reduces fatigue, cuts out much of the manual grunt work, and lets professionals focus on high-priority threats, insider risks, or strategic remediation efforts. Analysts become incident conductors, not alert chasers.

This collaboration is especially critical in zero-day attacks, where no historical pattern exists. AI flags behavior, but humans must analyze root causes, model consequences, and coordinate multi-layer responses—sometimes across entire supply chains or cloud ecosystems.

Analyst Roles in AI-Driven Environments

AI has shifted the cybersecurity job landscape—but not by eliminating jobs. Instead, it’s evolving job functions from manual execution to high-level orchestration. Analysts in 2025 are more strategic, technical, and interdisciplinary.

Emerging roles include:

AI Security Analysts: Experts who tune, train, and validate AI models to reduce false positives and increase precision

Threat Intelligence Curators: Professionals who feed models with up-to-date, localized, and verified threat data

Response Engineers: Specialists who configure automated workflows, define response playbooks, and manage SOAR systems

Adversarial ML Defenders: Niche experts who prevent AI from being manipulated or exploited by hackers

These are not low-skill, replaceable jobs. They require:

Deep understanding of cybersecurity principles

Cross-domain thinking (law, psychology, geopolitics)

Hands-on familiarity with AI outputs, platform behavior, and automation triggers

Even Tier 1 analysts today are trained not just to escalate, but to interpret AI insights, query large datasets, and assess anomalies across cloud, on-prem, and hybrid networks.

Why Humans Are Still Mission-Critical

There’s a reason even the most advanced cyber defense systems still employ human review teams. AI is great at answering “what just happened?”, but not “what should we do next?” The latter requires:

Prioritization based on business risk, brand impact, and data classification

Negotiation with stakeholders (legal, PR, HR)

Policy alignment and compliance awareness (GDPR, HIPAA, SOC 2)

Moral and ethical oversight—especially when user monitoring is involved

AI simply cannot assess organizational risk appetite, make judgment calls involving PR implications, or decide whether an action aligns with regional regulations.

The future isn’t about whether AI will replace cybersecurity. It’s about how security professionals can wield AI as a force multiplier. And the ones who thrive will be those who:

Learn to leverage AI without blindly trusting it

Adapt to new tools, workflows, and threat surfaces

Maintain the critical thinking that AI still can’t replicate

| Aspect | Description | Key Insights |
|---|---|---|
| Hybrid Models (AI + Humans) | Combining AI’s speed and scale with human judgment, escalation, and oversight. | Closes gaps between attack sophistication and defense capacity. Essential in modern cyber defense. |
| Augmented Response Systems | AI scans data, highlights threats; humans triage and execute responses via systems like SOAR. | Reduces fatigue, focuses analysts on high-priority threats, enhances decision-making. |
| Emerging Analyst Roles | New roles like AI Security Analysts, Threat Intelligence Curators, Response Engineers, and Adversarial ML Defenders. | Requires deep cybersecurity understanding, cross-domain thinking, and technical proficiency with AI and platforms. |
| Why Humans Remain Critical | Humans provide prioritization, compliance awareness, stakeholder negotiation, and moral oversight. | AI lacks contextual understanding, ethical reasoning, and the ability to align actions with complex policies. |
| Future Outlook | Cybersecurity roles evolve into orchestration, decision-making, and AI collaboration rather than manual tasks. | Success depends on professionals who adapt, think critically, and use AI as a force multiplier. |

Should You Still Pursue Cybersecurity in 2025?

Absolutely—cybersecurity is not being replaced by AI, it's being reshaped by it. In fact, demand for qualified cybersecurity professionals in 2025 is higher than ever, especially those who understand how to operate within AI-augmented environments. If you're considering entering the field, now is the time to future-proof your role by acquiring skills that AI can't replicate—such as strategic reasoning, human-layer risk assessment, and cross-functional security leadership.

Demand Forecast

Despite growing automation, the cybersecurity talent gap continues to widen. According to (ISC)², there’s a projected global shortfall of over 3.5 million unfilled roles in 2025. Companies aren’t just seeking generic IT staff—they’re hiring for roles that blend technical proficiency with real-world threat intelligence, risk analysis, and incident coordination.

Key drivers behind this demand:

Cloud security threats are expanding as more enterprises shift infrastructure to AWS, Azure, and GCP

AI-generated phishing and deepfake-based social engineering tactics are skyrocketing

Privacy laws like GDPR and CCPA are driving demand for compliance officers with cyber-legal literacy

Supply chain attacks and geopolitical cyber conflict are increasing complexity at the executive level

This means organizations need analysts, architects, engineers, and consultants who can think beyond systems—and connect security to people, policy, and platform risk.

If you're entering the field now, you're not late. You're perfectly timed to capitalize on the next evolution of the profession.

AI-Safe Roles and Certifications

The safest roles are the ones that complement AI, not compete with it. These include:

1. Security Analysts and SOC Engineers

AI can filter and prioritize alerts, but human analysts triage, investigate, and escalate them with full awareness of legal, operational, and brand impact.

2. Cybersecurity Architects

Designing scalable, secure environments across hybrid and cloud infrastructures is a strategic function that depends on human creativity, foresight, and cross-functional planning.

3. Governance, Risk & Compliance (GRC)

AI doesn't understand legal nuance, company culture, or reputation management. GRC professionals interpret laws, manage vendor risk, and align security policy to business reality.

4. Threat Intelligence & Red Team Experts

AI can't emulate the asymmetric thinking of real attackers. Red teamers, pentesters, and intel analysts outthink adversaries, build zero-trust models, and shape strategy—not just alerts.

Certifications that are AI-resistant and still in demand include:

CompTIA Security+ – Entry-level, foundational knowledge

CISSP (Certified Information Systems Security Professional) – Strategic leadership-level cert

CEH (Certified Ethical Hacker) – Popular for penetration testing and offensive security

CCSP (Certified Cloud Security Professional) – For cloud-native roles

[CERTIFICATION NAME] – Especially for human-centric specializations, combining applied skills with strategic context

Even as AI changes workflows, certifications provide proof of competence, critical thinking, and industry alignment that AI can’t replicate.

Cybersecurity isn't just surviving in the age of AI—it's redefining the edge of human-machine collaboration. The only thing that’s being automated away is manual repetition—not strategic value.

Future-Proof Your Career with ACSMI’s Advanced Cybersecurity & Management Certification

If you're serious about staying ahead in cybersecurity as AI reshapes the industry, ACSMI’s Advanced Cybersecurity & Management Certification is the most strategic move you can make. Built for professionals navigating AI-integrated threat environments, this program trains you to lead where automation ends—and critical human oversight begins.

Why This Certification Stands Out

This isn’t just another technical course. ACSMI’s Advanced Cybersecurity & Management Certification prepares you for roles that demand both strategic security acumen and AI literacy. It’s ideal for:

Security analysts in AI-augmented SOCs

GRC professionals managing compliance under automation

Threat response leads interfacing with SOAR and SIEM tools

Advisors who oversee ethical and operational AI security deployments

You’ll gain applied skills that AI can’t replace—judgment, escalation strategy, legal coordination, and interdepartmental security leadership.

Human-Layer Security Skills You’ll Build

This certification develops the competencies that define AI-resilient roles, including:

Triaging machine-generated alerts based on human risk context

Managing incident escalations with legal, reputational, and compliance impact

Leading cross-functional coordination between tech, legal, and operations

Preventing AI misuse, bias, or misconfiguration in enterprise systems

AI doesn't understand business logic. You do—and this certification teaches you how to act on it at scale.

Built for Real-World Implementation

ACSMI’s Advanced Cybersecurity & Management Certification is hands-on from day one. You’ll work through:

Simulated attacks against AI-powered defenses

Real-time SOAR and UEBA case studies

Strategic incident report writing and executive communication

Configuring AI systems with guardrails and ethical constraints

This isn’t just learning—it’s operational enablement that empowers you to perform in AI-driven cybersecurity roles starting immediately.

Why It Matters in 2025

The fastest-growing threat in cybersecurity isn’t just attackers—it’s overreliance on automation without oversight. Organizations are hiring professionals who can govern, audit, and escalate AI-driven decisions—not just follow playbooks.

ACSMI’s Advanced Cybersecurity & Management Certification positions you to lead that shift. It makes you the human in the loop that AI can’t replace—and that every company needs.

Final Thoughts

AI is transforming cybersecurity—but not by replacing it. It’s reshaping the battlefield, forcing organizations to rethink how speed, scale, and strategy are applied to digital defense. The truth in 2025 is this: AI can detect threats, but it can’t understand them in context, and it can’t decide how a breach should be handled when reputation, compliance, and risk tolerance are on the line.

This means the human layer is more valuable than ever. Companies aren’t eliminating cybersecurity jobs—they’re redefining them. And the professionals who stay ahead will be those who understand AI, but also know how to lead it.

ACSMI’s Advanced Cybersecurity & Management Certification is designed for exactly that. If you want to be part of cybersecurity’s next chapter—not replaced by it—this is how you lead.

The Hidden Risks of Over-Relying on AI in Cybersecurity

As AI becomes standard in modern security stacks, many organizations are rushing to deploy automated tools without fully understanding their blind spots. While automation promises efficiency, over-reliance on AI can backfire fast, especially when critical thinking, ethical nuance, and human accountability are taken out of the equation. In 2025, these overlooked risks aren’t theoretical; they’re happening in real networks, in real time.

1. AI Fatigue and Blind Trust

Just as alert fatigue overwhelmed SOC teams in the past, AI alert flooding is now a reality. When systems are trained on overly broad or noisy datasets, they generate false positives that desensitize analysts. The result? Human responders either start trusting the system blindly or, worse, ignore alerts altogether.

Some organizations are now reducing detection sensitivity just to quiet AI noise, which opens the door to stealthier threats that no longer trigger meaningful alerts. Trusting AI without validation loops creates a single point of failure that’s harder to detect than traditional gaps.
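The tradeoff is easy to see with a few numbers. The Python sketch below uses hypothetical model scores for one day's events and counts, at two alerting thresholds, how many alerts fire and how many real attacks slip past. Raising the score an event needs before it alerts shrinks the queue from dozens of alerts to one, but the stealthy attack disappears along with the noise.

```python
def alert_stats(scored_events, threshold):
    """Count alerts raised and real threats missed at a given alert threshold.

    `scored_events` is a list of (model_score, is_actually_malicious) pairs.
    """
    alerts_raised = sum(1 for score, _ in scored_events if score >= threshold)
    threats_missed = sum(1 for score, bad in scored_events if bad and score < threshold)
    return alerts_raised, threats_missed


# Hypothetical day of scored events: 60 noisy benign ones, one loud attack,
# and one stealthy "low and slow" attack the model scores only moderately.
events = [(0.60, False)] * 40 + [(0.70, False)] * 20 + [(0.95, True), (0.55, True)]

for threshold in (0.5, 0.8):
    raised, missed = alert_stats(events, threshold)
    print(f"threshold={threshold}: {raised} alert(s), {missed} real threat(s) missed")
```

At 0.5 every attack is caught but analysts face 62 alerts; at 0.8 they face one alert and never see the stealthy intrusion. Validation loops exist to catch exactly that silent gap.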

2. Black Box Outputs Limit Accountability

Many AI-based systems used in cybersecurity—especially those using deep learning—produce “black box” decisions. They’ll flag an event as a risk but offer no transparent explanation for why.

This becomes dangerous when:

A legitimate user is deactivated without notice

Automated systems quarantine files needed for business continuity

Legal or regulatory bodies demand logs or justifications for a security action

You can’t defend a decision you don’t understand. That’s why human validation, logging clarity, and transparent escalation processes remain non-negotiable in AI-assisted environments.

3. AI Systems Are Attack Surfaces

Adversarial actors are now designing exploits that target the AI itself—not the firewall, not the endpoint, but the model logic. These include:

Data poisoning: Subtly feeding malicious data into training sets

Adversarial inputs: Crafting scripts or files designed to bypass detection

Model inversion attacks: Extracting sensitive data from the model’s learned behavior
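Data poisoning can be illustrated with even the simplest learned baseline. In the toy Python example below, an anomaly threshold is set at the mean plus three standard deviations of the training data. Assuming an attacker manages to inject a handful of inflated records into that training set without anyone checking them, the learned threshold rises far enough that a later exfiltration no longer trips it. All volumes are hypothetical, and real poisoning attacks target much more complex ML pipelines, but the mechanism is the same: corrupt what the model learns as "normal."

```python
from statistics import mean, stdev


def alert_threshold(training_data):
    """Alert on anything above mean + 3 standard deviations of 'normal' history."""
    return mean(training_data) + 3 * stdev(training_data)


# Hypothetical daily upload volumes (MB) for one server.
clean_history = [100, 110, 95, 105, 98, 102, 107, 93, 101, 99]

# The attacker injects inflated records directly into the training data.
poisoned_history = clean_history + [150, 200, 260, 330, 400]

exfiltration_mb = 380
print(exfiltration_mb > alert_threshold(clean_history))     # True: caught
print(exfiltration_mb > alert_threshold(poisoned_history))  # False: slips through
```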

This shift means AI is no longer just a tool—it’s a target. And if you don’t have cybersecurity pros who understand AI architecture, these attacks go unnoticed until damage is done.

4. Compliance Violations from Misconfigured Automation

One overlooked threat is regulatory noncompliance caused by automated security actions. For instance:

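An AI system deletes user data in response to a malware trigger, breaching data-retention obligations; under GDPR, the accidental destruction of personal data can itself count as a personal data breach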

A model flags behavioral anomalies, triggering employee surveillance without proper disclosure

Automated log scans inadvertently retain sensitive health or financial data without encryption or anonymization

These are legal liabilities, not just technical hiccups. And AI, being logic-bound, doesn’t know where compliance lines are drawn. Human GRC experts are essential to ensuring AI doesn’t cross those boundaries.

5. Loss of Institutional Knowledge

Over-automation can lead to a generation of security analysts who never learn how to triage without AI assistance. As tools take over pattern matching, signature analysis, and baseline comparisons, junior teams risk losing the core competencies of threat reasoning and investigative analysis.

When the system fails or behaves unpredictably, these teams may be unprepared to intervene, escalate properly, or rebuild manual workflows under pressure. That's not just inefficient—it's dangerous.

In short, AI enhances cybersecurity—but only when it's governed, not blindly trusted. The future belongs to professionals who know how to question, interpret, and override AI—and to organizations that make AI accountable to human oversight, not the other way around.


Frequently Asked Questions

Is AI used in cybersecurity?

Yes—AI is now foundational to modern cybersecurity operations. It powers everything from anomaly detection and phishing filters to automated threat response systems. AI is especially effective in scanning massive datasets, identifying patterns in real-time, and flagging unusual behavior that might indicate an intrusion. Platforms like CrowdStrike, Microsoft Defender, and SentinelOne use AI to track endpoint activity, identify malware behavior, and isolate compromised assets within seconds. However, AI only enhances human efforts—it doesn’t replace them. Security professionals still need to analyze alerts, make judgment calls, and ensure actions align with legal, ethical, and organizational standards.

Can you combine AI and cybersecurity skills?

Absolutely. In fact, combining AI and cybersecurity is one of the most future-proof skill sets in tech. Many cybersecurity professionals now add AI knowledge to their skill stack, learning how models are trained, where they can fail, and how to validate AI outputs. Certifications like ACSMI’s Advanced Cybersecurity & Management Certification are built to help professionals navigate AI-integrated environments, preparing them both to defend systems and to oversee AI-generated decisions. With growing demand for AI-aware cybersecurity roles, this combination offers high job security and leadership potential across sectors.

Will AI replace cybersecurity?

AI is not on track to replace cybersecurity—it's on track to restructure it. Repetitive tasks like log filtering, basic phishing detection, and behavior flagging are being automated. But decision-making, context analysis, ethical oversight, and incident response leadership remain firmly human responsibilities. AI still lacks emotional intelligence, cross-disciplinary reasoning, and the ability to handle complex multi-stakeholder decisions during breaches. Instead of replacing cybersecurity roles, AI is reshaping them, creating demand for professionals who can interpret machine outputs, refine automation systems, and guide escalation processes.

How does AI help stop hackers?

AI identifies hacker behavior by recognizing patterns and anomalies in system activity. It can detect signs of brute force attacks, lateral movement within networks, or attempts to escalate privileges. AI-powered systems also block known malicious domains, isolate compromised devices, and apply behavioral heuristics to detect unknown threats (zero-day attacks). For instance, AI can flag a legitimate user account behaving abnormally, indicating potential credential theft. However, AI is reactive unless paired with up-to-date threat intelligence and strong governance, which only skilled professionals can provide. AI helps stop hackers—but humans finalize the defense strategy.

How does AI improve cybersecurity?

AI improves cybersecurity by increasing speed, scale, and accuracy in detecting and responding to threats. It enables 24/7 monitoring of systems, reduces false negatives in malware detection, and streamlines complex tasks like log analysis. AI also helps reduce analyst fatigue by handling repetitive functions, allowing human teams to focus on high-impact threats. In threat intelligence, AI quickly processes vast volumes of data to identify new tactics or emerging threat actors. That said, AI is only as effective as the people managing it—it must be trained, validated, and continuously tuned to stay relevant.