AI-Driven Cybersecurity Tools: Predicting the Top Innovations for 2026–2030

AI will not “replace” security teams. It will replace slow security teams. Between 2026 and 2030, the biggest shift is not a new product category. It is decision velocity: tools that collapse detection, context, and response into a single motion will win, and tools that only generate alerts will get ripped out.

This article predicts the AI-driven cybersecurity innovations that will matter, how they will actually be used in real SOC workflows, and what to demand before you trust them in production. Use it to prevent vendor regret, reduce analyst burnout, and build defenses that scale.

1. 2026–2030: the real reason AI security tooling is changing so fast

The security tool market is entering a brutal phase where “more detections” stops being valuable. Most teams are already drowning, and AI is about to multiply both the attack surface and the noise. The innovation that matters is anything that improves the signal-to-action ratio, especially across endpoints, identity, and cloud. This is why the next wave of capability planning must be tied to the skills and operating models described in future skills for cybersecurity professionals and to the workforce realities covered in automation and the future cybersecurity workforce.

What makes 2026–2030 different is that AI will exist on both sides, and attackers will use it with fewer constraints. That means your tools must assume: (1) phishing is personalized, (2) identity is the primary intrusion path, (3) endpoints are the evidence layer, and (4) investigations must be faster than lateral movement. Those assumptions show up repeatedly across modern threat analysis like the phishing trends report, the state of ransomware 2025, and the broader 2025 data breach report.

Here is the uncomfortable pain point most teams do not admit. They do not have a tooling problem. They have a “proof” problem. They cannot reliably answer: what happened, what is impacted, what do we do next, and how do we stop the repeat. That is why the most important AI-driven innovations will align tightly with emerging evidence expectations in future cybersecurity audit practices and regulatory pressure in future cybersecurity compliance.

If you want a clean mental model for 2026–2030 buying decisions, use this filter: does the tool reduce uncertainty fast, and does it make containment consistent? If it cannot do both, it will become expensive noise. That risk grows as standards move to the next generation described in future cybersecurity standards and as privacy expectations harden (see privacy regulation trends).

2. The top AI tool innovations that will dominate SOCs by 2030

From 2026 to 2030, “AI security” will split into two categories. Category one is marketing: chat interfaces on top of old telemetry. Category two is operational: systems that reduce time to containment and prove the chain of events with defensible evidence. You want category two, especially as audit expectations shift in future audit innovations and as regulatory scrutiny escalates in future compliance predictions.

AI copilots that do not hallucinate: proof-first investigation assistance

The best copilots will not be “smart.” They will be disciplined. They will produce investigation summaries that are anchored to raw telemetry, with clickable proof, and they will refuse to guess. If a copilot cannot show you where each claim comes from, it becomes a liability the second you face a breach review. This is why compliance and evidence maturity are converging with SOC design, similar to the direction implied by NIST framework adoption and the push toward measurable controls in compliance trends.
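To make “proof-first” concrete, here is a minimal Python sketch of a proof-linking check that a team could run over copilot output. It assumes the copilot returns each summary sentence together with the IDs of the raw events it relied on. The `Claim` type, the event-ID format, and the `UNKNOWN` label are hypothetical and do not come from any vendor's API.

```python
from dataclasses import dataclass, field


@dataclass
class Claim:
    """One sentence from a copilot's investigation summary (hypothetical shape)."""
    text: str
    evidence_ids: list[str] = field(default_factory=list)  # IDs of raw events backing the claim


def validate_summary(claims: list[Claim], telemetry_ids: set[str]) -> list[str]:
    """Keep a claim only if every evidence ID it cites exists in the telemetry store.

    A claim with no evidence, or with evidence that cannot be found, is rewritten
    as UNKNOWN instead of being asserted.
    """
    reviewed = []
    for claim in claims:
        cited = claim.evidence_ids
        if cited and all(eid in telemetry_ids for eid in cited):
            reviewed.append(f"{claim.text} [proof: {', '.join(cited)}]")
        else:
            reviewed.append(f"UNKNOWN (unsupported): {claim.text}")
    return reviewed


if __name__ == "__main__":
    telemetry = {"edr-1042", "idp-77"}  # hypothetical event IDs
    summary = [
        Claim("Alice signed in from a new device", ["idp-77"]),
        Claim("An unsigned binary ran on host FIN-22", ["edr-1042"]),
        Claim("Data was exfiltrated", []),  # no evidence cited
    ]
    for line in validate_summary(summary, telemetry):
        print(line)
```

The check fails closed. A claim that cites a missing event is treated the same way as a claim that cites nothing.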

Identity-first security tooling becomes non-negotiable

AI-powered attackers will lean into identity abuse because it is efficient and looks normal. The winning tools will merge identity signals with endpoint evidence, then automate reversible containment. If your EDR cannot understand session context, you will keep chasing “weird endpoint behavior” while the attacker moves using valid tokens. This is exactly why endpoint evolution matters, and why the trajectory described in endpoint security advances by 2027 must be tied directly to detection architecture described in next-gen SIEM predictions.
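Here is a simplified Python sketch of identity-plus-endpoint correlation. It pairs each anomalous identity event with endpoint activity by the same user that occurs shortly afterward. The event types, field names, and the 30-minute window are illustrative assumptions. A production tool would also match on session tokens, device IDs, and other context.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class IdentityEvent:
    user: str
    session_id: str
    time: datetime
    anomaly: str  # e.g. "token replayed from unfamiliar IP"


@dataclass
class EndpointEvent:
    host: str
    user: str
    time: datetime
    activity: str


def correlate(identity_events, endpoint_events, window=timedelta(minutes=30)):
    """Pair each anomalous identity event with endpoint activity by the same
    user that occurs within `window` after it."""
    matches = []
    for ie in identity_events:
        for ee in endpoint_events:
            if ee.user == ie.user and ie.time <= ee.time <= ie.time + window:
                matches.append((ie, ee))
    return matches


if __name__ == "__main__":
    t0 = datetime(2026, 3, 1, 9, 0)
    identity = [IdentityEvent("alice", "s-1", t0, "token replayed from unfamiliar IP")]
    endpoint = [
        EndpointEvent("FIN-22", "alice", t0 + timedelta(minutes=5), "new scheduled task"),
        EndpointEvent("FIN-22", "alice", t0 + timedelta(hours=3), "browser launched"),
        EndpointEvent("HR-07", "bob", t0 + timedelta(minutes=2), "PowerShell spawned"),
    ]
    for ie, ee in correlate(identity, endpoint):
        print(f"{ie.user}: {ie.anomaly} -> {ee.host}: {ee.activity}")
```

The nested loop is acceptable for a sketch. At SOC scale you would index endpoint events by user and time instead.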

Continuous exposure management replaces annual vulnerability theater

Teams will stop treating vulnerability management as a backlog and start treating it as a risk pipeline. AI will prioritize exposures that are exploitable in your environment, not just “critical on paper.” That matters because attackers increasingly compress timelines, which is reflected in incident patterns across the ransomware analysis and the broader data breach report.

The pain point here is brutal. Most orgs patch based on fear, not exploitability. That wastes cycles while the real risk stays unpatched. AI-driven exploitability scoring will win, but only if it is explainable and connected to business criticality, which is increasingly demanded by the frameworks and oversight trends described in future cybersecurity standards.
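The following Python sketch illustrates explainable exploitability scoring. It ranks exposures by whether they are exploitable in your environment and by how critical the asset is, and it returns the reasons alongside each score. The fields and weights are hypothetical placeholders, not a published scoring model.

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    vuln_id: str
    cvss: float               # base severity "on paper", 0-10
    exploited_in_wild: bool   # e.g. listed in a known-exploited catalog
    internet_facing: bool     # reachable by an attacker from outside
    mitigated: bool           # a compensating control blocks the exploit path
    asset_criticality: int    # 1 (low) to 3 (business-critical), set with the business


# Hypothetical weights; calibrate them against your own incident history.
WEIGHTS = {"exploited_in_wild": 4.0, "internet_facing": 2.0, "mitigated": -3.0}


def score(e: Exposure) -> tuple[float, list[str]]:
    """Return a priority score and the reasons behind it."""
    points = e.cvss / 2  # severity counts, but cannot dominate on its own
    reasons = [f"CVSS {e.cvss} -> +{e.cvss / 2:.2f}"]
    for flag, weight in WEIGHTS.items():
        if getattr(e, flag):
            points += weight
            reasons.append(f"{flag} -> {weight:+.1f}")
    points = max(points, 0.0) * e.asset_criticality  # never rank below zero
    reasons.append(f"x{e.asset_criticality} asset criticality")
    return points, reasons


if __name__ == "__main__":
    exposures = [
        Exposure("VULN-A", 9.8, False, False, False, 1),
        Exposure("VULN-B", 7.5, True, True, False, 3),
    ]
    for e in sorted(exposures, key=lambda x: score(x)[0], reverse=True):
        pts, why = score(e)
        print(e.vuln_id, round(pts, 2), "; ".join(why))
```

With these placeholder weights, the CVSS 9.8 issue on an isolated, low-criticality asset ranks below the CVSS 7.5 issue that is known to be exploited, internet-facing, and on a business-critical system.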

3. How to evaluate AI security tools without getting trapped by demos

Most AI security demos are optimized like sales funnels. They show a perfect incident, perfect logs, perfect correlation, and a perfect answer. Real environments are messy. Logs are missing. Names do not match. Agents fail. People use personal devices. If the tool cannot survive mess, it will not survive attackers. Use these evaluation principles grounded in operational reality from state of endpoint security effectiveness and the scaling pressures described in the global cybersecurity market outlook.

1) Demand replay testing, not demo theater

Your best evaluation method is to replay real incidents or red-team simulations into the tool and see if it produces the same conclusions your best analysts reached, faster. If the tool “gets it right” only with perfect data, it is not AI. It is fragile automation. Tie this to the evidence discipline expected in future audit practices.
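The following Python sketch shows one way to score a replay test. It treats the conclusions your best analysts reached as the reference. It then measures how many of those conclusions the tool reproduced, how many of the tool's own conclusions matched, and whether the tool finished faster. The `ReplayResult` structure and the finding labels are hypothetical. Treating analyst findings as ground truth is also an assumption. A tool finding that the analysts missed should be reviewed by hand, not counted as wrong automatically.

```python
from dataclasses import dataclass


@dataclass
class ReplayResult:
    incident_id: str
    analyst_findings: set[str]   # conclusions your best analysts reached
    tool_findings: set[str]      # conclusions the tool reached on the same replayed data
    analyst_minutes: float
    tool_minutes: float


def evaluate(results: list[ReplayResult]) -> dict[str, float]:
    """recall: share of analyst conclusions the tool reproduced.
    precision: share of tool conclusions that matched an analyst conclusion.
    faster_share: share of incidents where the tool finished first."""
    matched = sum(len(r.analyst_findings & r.tool_findings) for r in results)
    expected = sum(len(r.analyst_findings) for r in results)
    claimed = sum(len(r.tool_findings) for r in results)
    faster = sum(r.tool_minutes < r.analyst_minutes for r in results)
    return {
        "recall": matched / expected if expected else 0.0,
        "precision": matched / claimed if claimed else 0.0,
        "faster_share": faster / len(results) if results else 0.0,
    }


if __name__ == "__main__":
    runs = [
        ReplayResult("IR-1", {"phishing", "token_theft"}, {"phishing", "token_theft"}, 240, 35),
        ReplayResult("IR-2", {"ransomware", "rdp_bruteforce"}, {"ransomware", "unrelated_malware"}, 180, 20),
    ]
    print(evaluate(runs))
```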

2) Measure actionability, not alert volume

Ask one question: how many alerts lead to safe containment actions? If the answer is low, the tool is adding cost, not reducing risk. This is how you stop “AI SIEM” from becoming expensive log storage, a trap highlighted indirectly by modernization themes in next-gen SIEM evolution.
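Here is a minimal sketch of the actionability metric. It assumes that each closed alert is tagged with its outcome. The rule names and the `contained` / `benign` / `ignored` labels are hypothetical.

```python
from collections import Counter


def actionability(alerts):
    """alerts: iterable of (rule_name, outcome) pairs, where outcome is one of
    "contained", "benign", or "ignored". Returns, per rule, the share of alerts
    that led to a safe containment action."""
    total, contained = Counter(), Counter()
    for rule, outcome in alerts:
        total[rule] += 1
        if outcome == "contained":
            contained[rule] += 1
    return {rule: contained[rule] / total[rule] for rule in total}


if __name__ == "__main__":
    closed_alerts = [
        ("impossible_travel", "contained"),
        ("impossible_travel", "benign"),
        ("dns_entropy", "ignored"),
        ("dns_entropy", "ignored"),
        ("dns_entropy", "benign"),
    ]
    print(actionability(closed_alerts))
```

In this example the hypothetical `dns_entropy` rule scores 0.0. It generates work but never leads to containment, which makes it a candidate for tuning or removal.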

3) Force the vendor to show sources and failure modes

The AI must show where it got the evidence. It must also tell you when it is uncertain. If it cannot do that, it will create false confidence, and false confidence is how breaches expand. This matters even more as privacy rules evolve, because mistakes become reportable events faster under trends discussed in privacy regulation predictions and GDPR evolution.

4. The tool stack that will win in 2026–2030: how innovations connect end to end

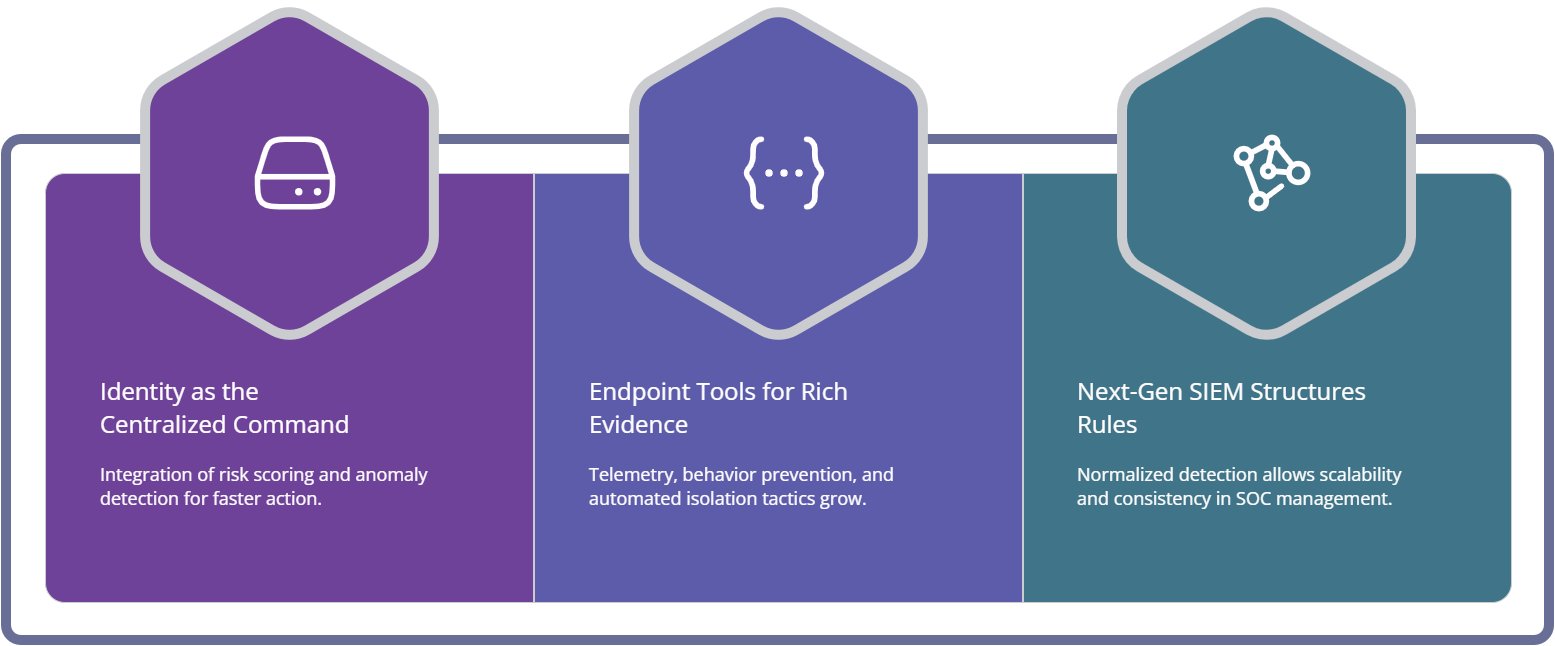

Most organizations buy point solutions and then wonder why they cannot investigate quickly. The winning stack from 2026–2030 will look like a connected system: identity context, endpoint evidence, normalized semantics in the SIEM layer, and automated containment with guardrails. This is not optional if you face growing sector risk, whether you work in finance (see finance cybersecurity trends), healthcare (see healthcare threat predictions), or the public sector (see government/public sector forecasting).

Identity and access becomes the command center

You will see tighter integration between identity risk scoring, session token anomaly detection, and endpoint containment. The key innovation is that identity signals will trigger endpoint actions without waiting for manual confirmation, but only for reversible actions. This is where “AI automation” stops being a buzzword and becomes a measurable decrease in time to contain, a pressure reinforced by real-world incident patterns found in the ransomware report.

Next-gen SIEM becomes semantics, not storage

The SIEM of 2030 is not a log bucket. It is a normalized detection substrate that makes rules portable and evidence consistent. This aligns with the direction in next-gen SIEM predictions and the broader standards maturity implied in future cybersecurity standards.

If you cannot normalize identity, endpoint, and cloud events into a consistent model, you cannot scale detections. You will keep reinventing rules for each data source, then your SOC becomes a fragile craft. AI will help generate detections, but only if your logging foundation is mature enough to support it, which is why baseline evidence discipline matters, similar to what is discussed in predictive audit innovations.
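The following Python sketch shows what normalizing events into a consistent model means in practice. It maps identity, endpoint, and cloud records into one hypothetical event model so that a single rule can run across all three sources. The raw field names, the action names, and the `mfa_disabled` verb are invented for illustration. Real products each ship their own schemas.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NormalizedEvent:
    """Hypothetical common event model that every detection rule is written against."""
    source: str
    user: str
    host: Optional[str]
    action: str


# Hypothetical raw field names per source; real products each use their own schema.
FIELD_MAPS = {
    "idp": {"user": "actor_upn", "host": None, "action": "event_type"},
    "edr": {"user": "UserName", "host": "DeviceName", "action": "ActionType"},
    "cloud": {"user": "principal", "host": None, "action": "operation"},
}

# Hypothetical raw action names mapped onto one canonical verb.
ACTION_ALIASES = {
    "mfa.factor.removed": "mfa_disabled",
    "DisableMfaDevice": "mfa_disabled",
}


def normalize(source: str, raw: dict) -> NormalizedEvent:
    fmap = FIELD_MAPS[source]

    def get(key):
        name = fmap[key]
        return raw.get(name) if name else None

    raw_action = get("action") or ""
    return NormalizedEvent(
        source=source,
        user=(get("user") or "").lower(),  # one identity, one spelling
        host=get("host"),
        action=ACTION_ALIASES.get(raw_action, raw_action),
    )


def detect_mfa_disabled(event: NormalizedEvent) -> bool:
    """Written once against the common model, so it covers every mapped source."""
    return event.action == "mfa_disabled"


if __name__ == "__main__":
    raw_events = [
        ("idp", {"actor_upn": "Alice@corp.example", "event_type": "mfa.factor.removed"}),
        ("cloud", {"principal": "alice@corp.example", "operation": "DisableMfaDevice"}),
        ("edr", {"UserName": "bob", "DeviceName": "HR-07", "ActionType": "ProcessCreated"}),
    ]
    events = [normalize(src, rec) for src, rec in raw_events]
    print([(e.source, e.user) for e in events if detect_mfa_disabled(e)])
```

Lowercasing the user field lets the identity and cloud events resolve to the same person. Without that step the rule would still fire, but correlation across sources would silently break.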

Endpoint tools evolve into containment engines

The endpoint is where attackers execute, stage, and persist. That means endpoint telemetry will remain the richest evidence layer. Innovations will push EDR toward identity-aware correlation, memory-level behavioral prevention, and automated isolation policies. This is exactly the trajectory covered in endpoint security advances by 2027 and validated through performance discussions in the state of endpoint security report.

5. Building defenses around AI tools: the playbook that stops “AI noise”

AI-driven tools can either accelerate protection or accelerate confusion. Your outcome depends on how you implement them. The most effective teams treat AI tools as a controlled system: guardrails, measurements, and repeatable operations. This is where talent development matters, and why career pathways like SOC analyst progression, SOC manager advancement, and specialized tracks like ethical hacking become directly connected to tool success.

1) Start with containment standards before automation

If your team cannot agree on what “containment” means, you cannot automate it safely. Define reversible actions, escalation thresholds, and evidence capture requirements. Then automate the reversible actions. This reduces the risk of automation harming production while still improving time to contain, the window attackers exploit most. Tie that discipline to the governance pressure described in future compliance predictions.
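A containment standard like this can be encoded as a small, testable policy. In this Python sketch, the set of reversible actions, the 0.9 confidence threshold, and the decision labels are hypothetical values that a team would agree on first. The policy only runs reversible actions automatically, and only after evidence has been captured.

```python
from dataclasses import dataclass

# Hypothetical containment standard agreed on before any automation is enabled.
REVERSIBLE_ACTIONS = {"revoke_sessions", "isolate_host", "suspend_account"}
AUTO_CONFIDENCE = 0.9  # minimum detection confidence to act without a human


@dataclass
class Decision:
    action: str
    mode: str    # "auto", "capture_evidence_first", or "escalate"
    reason: str


def decide(action: str, confidence: float, evidence_captured: bool) -> Decision:
    """Apply the containment standard to one proposed action."""
    if action not in REVERSIBLE_ACTIONS:
        return Decision(action, "escalate", "irreversible action needs human approval")
    if confidence < AUTO_CONFIDENCE:
        return Decision(action, "escalate", f"confidence {confidence:.2f} below threshold")
    if not evidence_captured:
        # Capture evidence first so containment does not destroy it.
        return Decision(action, "capture_evidence_first", "evidence capture is required")
    return Decision(action, "auto", "reversible, high confidence, evidence captured")


if __name__ == "__main__":
    for args in [("wipe_host", 0.99, True), ("isolate_host", 0.6, True),
                 ("isolate_host", 0.95, False), ("isolate_host", 0.95, True)]:
        print(decide(*args))
```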

2) Establish AI tool evaluation gates

Do not deploy AI tooling broadly until it passes:

replay tests on real incident data

proof-linking requirements for every summary claim

measurable reduction in time to contain

measurable reduction in repeat incidents by root cause

This is how you avoid buying “AI dashboards” that look impressive while your breach risk stays the same. The “proof” requirement matters even more under privacy and regulatory trends, including GDPR evolution and the broader direction of privacy regulation shifts.
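The evaluation gates can be written down as an explicit pass/fail check, so that the rollout decision does not rest on impressions. This Python sketch is an illustration built on assumptions. The metric names, the 90% replay recall floor, and the 20% time-to-contain improvement are placeholders to set with your own SOC leadership. Proof linking is held at 100% because the gates apply it to every summary claim.

```python
from dataclasses import dataclass


@dataclass
class PilotMetrics:
    replay_recall: float          # share of analyst conclusions reproduced in replay tests
    proof_linked_share: float     # share of summary claims with resolvable evidence
    ttc_before_min: float         # median time to contain before the pilot, in minutes
    ttc_after_min: float          # median time to contain during the pilot, in minutes
    repeats_before: int           # repeat incidents by root cause, prior period
    repeats_after: int            # repeat incidents by root cause, pilot period


def failed_gates(m: PilotMetrics, min_recall: float = 0.9, min_ttc_gain: float = 0.2) -> list[str]:
    """Return the gates the tool failed; an empty list means broad rollout may proceed."""
    failures = []
    if m.replay_recall < min_recall:
        failures.append("replay tests")
    if m.proof_linked_share < 1.0:  # every summary claim must be proof-linked
        failures.append("proof linking")
    if m.ttc_after_min > m.ttc_before_min * (1 - min_ttc_gain):
        failures.append("time to contain")
    if m.repeats_after >= m.repeats_before:
        failures.append("repeat incidents")
    return failures


if __name__ == "__main__":
    print(failed_gates(PilotMetrics(0.95, 1.0, 120, 60, 8, 5)))
    print(failed_gates(PilotMetrics(0.80, 0.97, 120, 110, 8, 8)))
```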

3) Close the loop after every incident

The highest-performing security programs treat incidents as engineering feedback. Every incident must produce a control improvement and a detection improvement. That is how you reduce repeat incidents, which is one of the most important metrics for maturity. If you want benchmarking data and patterns, connect your approach to themes in the 2025 breach report and phishing trend analysis.
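Here is a minimal sketch of closing the loop. It assumes incidents are logged in chronological order, each with a root-cause label and ticket references for the control fix and the detection fix. All field names and values are hypothetical. The sketch flags incidents that have not yet produced both improvements and computes the repeat rate by root cause.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Incident:
    incident_id: str
    root_cause: str
    control_fix: Optional[str] = None     # ticket for the control improvement
    detection_fix: Optional[str] = None   # ticket for the detection improvement


def open_loops(incidents: list[Incident]) -> list[str]:
    """Incidents that have not yet produced both a control and a detection fix."""
    return [i.incident_id for i in incidents if not (i.control_fix and i.detection_fix)]


def repeat_rate(incidents: list[Incident]) -> float:
    """Share of incidents whose root cause already appeared earlier in the
    (chronologically ordered) list."""
    seen, repeats = set(), 0
    for i in incidents:
        if i.root_cause in seen:
            repeats += 1
        seen.add(i.root_cause)
    return repeats / len(incidents) if incidents else 0.0


if __name__ == "__main__":
    history = [
        Incident("IR-1", "mfa_fatigue", "CHG-101", "DET-201"),
        Incident("IR-2", "unpatched_vpn", "CHG-102"),
        Incident("IR-3", "mfa_fatigue"),
    ]
    print(open_loops(history), round(repeat_rate(history), 2))
```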

6. FAQs: AI-driven cybersecurity tools and innovations (2026–2030)


Evidence automation. The ability to generate a defensible case file with timelines, impacted assets, and proof links will become a competitive advantage, not just a reporting feature. It reduces investigation time, supports faster containment, and makes audits survivable. This matters more as audit expectations evolve in future audit practices and compliance pressure increases through trends outlined in future compliance predictions.
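A case file of this kind can be assembled from evidence records that each keep a pointer to their source event. This Python sketch is illustrative only. The `Evidence` fields and the ID format are assumptions, not a standard case-file schema.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Evidence:
    event_id: str       # pointer back to the raw log entry or artifact
    time: datetime
    asset: str
    description: str


def build_case_file(case_id: str, evidence: list[Evidence]) -> dict:
    """Assemble a chronological timeline and an impacted-asset list, keeping a
    proof link on every timeline entry."""
    ordered = sorted(evidence, key=lambda e: e.time)
    return {
        "case_id": case_id,
        "impacted_assets": sorted({e.asset for e in ordered}),
        "timeline": [
            f"{e.time.isoformat()} {e.asset}: {e.description} (proof: {e.event_id})"
            for e in ordered
        ],
    }


if __name__ == "__main__":
    case = build_case_file("CASE-0001", [
        Evidence("edr-1042", datetime(2026, 3, 1, 9, 5), "FIN-22", "scheduled task created"),
        Evidence("idp-77", datetime(2026, 3, 1, 9, 0), "alice@corp.example", "session token replayed"),
    ])
    for entry in case["timeline"]:
        print(entry)
```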


Identity plus endpoint correlation. Most high-impact intrusions increasingly leverage valid credentials or token abuse, so tools that fuse identity context with endpoint activity cut investigation time dramatically and enable safer automation. Align your plan with the future-facing endpoint trajectory in endpoint security advances by 2027 and the monitoring foundation described in next-gen SIEM evolution.


Require proof linking. Every summary sentence must reference a concrete source event, log, or artifact. Also require uncertainty display, so the tool says “unknown” instead of guessing. Treat hallucination as a severity-one defect, because it creates false confidence. This discipline aligns directly with audit expectations in future cybersecurity audit practices and regulatory realities in cybersecurity compliance trends.


They are layered on top of broken data. If logs are missing, identity telemetry is incomplete, or endpoint coverage is inconsistent, the AI becomes a noise multiplier. Fix coverage and normalization first, then deploy AI. Use baseline benchmarking from state of endpoint security effectiveness and align modernization plans with next-gen SIEM predictions.


It will absorb many functions, but it will not eliminate specialized controls like endpoint prevention or identity governance. The SIEM becomes the semantic layer that connects tools, normalizes events, and supports evidence-grade investigations. The real question is whether it reduces time to containment and makes detections portable across data sources. This is the direction implied by next-gen SIEM evolution and the evidence-based trend toward next-generation standards.


Train for systems thinking, not button clicking. Analysts need competence in detection engineering, identity workflows, automation guardrails, and incident command. The best tools still require people who can design operations and validate outcomes. Use ACSMI’s roadmap on future cybersecurity skills and the reality check in automation and workforce predictions. Then build role pathways using the SOC analyst guide and leadership progression in SOC manager advancement.


Choose tools that produce defensible evidence, support continuous control monitoring, and reduce time to contain without risky automation. Regulated environments will increasingly be judged on proof, not intentions, especially under evolving privacy expectations in privacy regulation trends and the continuing evolution of frameworks like GDPR 2.0. Build your selection criteria to satisfy auditors, not just SOC preferences.