Top IoT Security Companies Directory & Reviews (2026-2027 Update)

IoT is where security programs go to die—because it multiplies everything you already struggle with: asset visibility gaps, weak identity, long patch cycles, vendor sprawl, and “it can’t go down” operational pressure. One unmanaged camera, one forgotten gateway, one “temporary” cellular router can become the foothold that bypasses your shiny perimeter tools and lands straight inside production networks. This 2026–2027 directory is built to help you pick IoT security vendors like a grown-up buyer: what they’re best for, where they fail, and the questions that force real outcomes—not dashboards that look nice while botnets keep scaling and DoS pressure keeps rising.

1. 2026–2027 IoT Security Reality: What Changed and Why Vendor Choice Matters More Now

In 2026–2027 buying decisions, “IoT security” can’t mean “agent on endpoints,” because most IoT can’t run one, and most OT will never tolerate it. You’re buying a capability set: discovery, classification, segmentation, detection, response, and governance. And you’re buying it for environments where outages are career-ending.

Three forces are tightening the screws:

Regulation is moving from “guidance” to “obligation.” The EU Cyber Resilience Act entered into force on December 10, 2024, with reporting obligations applying from September 11, 2026 and the main obligations applying from December 11, 2027. That matters because IoT and “products with digital elements” are precisely where organizations get caught by weak secure development, poor vulnerability handling, and missing evidence.

Consumer IoT labeling is evolving, but it’s not stable. The FCC’s U.S. Cyber Trust Mark program exists as a framework, yet recent reporting shows turbulence around administration and implementation. Translation: you can’t rely on labels alone. You still need your own validation model and a vendor that can help you prove controls, not just claim them.

The threat model keeps shifting toward identity + supply chain + automation. If you’re treating IoT as “network devices,” you’re missing how attackers chain weak IoT auth + exposed services + lateral movement into ransomware outcomes: exactly the pattern behind modern ransomware response and recovery and the forward curve in AI-powered attacks.

So, when you evaluate IoT security companies, you should map them to your environment type:

Enterprise IoT / IT+IoT (cameras, printers, badge readers, smart facilities)

Healthcare IoT (clinical devices + “always-on” requirements)

OT/ICS (manufacturing, energy, utilities, critical infrastructure)

IoT product manufacturers (secure-by-design, SBOM, vuln disclosure, compliance evidence)

And you should anchor your evaluation to fundamentals you already use elsewhere:

Discovery + policy enforcement ties to access control models

Monitoring and alert quality ties to SIEM basics

Detection posture ties to IDS deployment

Governance and audit survivability ties to security audits best practices

The firms below are organized so you can pick fast, avoid expensive mistakes, and build a program that doesn’t collapse the moment a “critical” device can’t be patched for 18 months.

2. Top IoT Security Companies (2026–2027 Reviews): Who They’re Best For and How to Buy Them Without Regret

A directory is useless if it doesn’t tell you fit. Below are 25 reviews that focus on the buyer question: “Will this reduce my risk in a way my team can actually sustain?” Keep your evaluation rooted in practical controls like firewall configurations, operational monitoring via SIEM, and the reality that many IoT compromises scale into botnet disruption and DoS mitigation.

1) Armis

Best for: Organizations drowning in unknown devices across IT/IoT/OT.

Armis tends to shine when your first problem is embarrassing but common: you can’t protect what you can’t even name. The real value is not “a prettier inventory”—it’s risk context that leads to action: which devices are exposed, which are high-risk, which connect to sensitive segments, and which represent unacceptable drift. Tie evaluation back to the control story: how will this feed segmentation policy in your firewall layer, and how will alerts flow into your SIEM workflows?

Buyer trap: treating visibility as the end. Visibility must end in enforcement.

2) Claroty

Best for: OT/ICS-heavy environments where uptime and safety dominate.

Claroty fits when you’re dealing with industrial networks where “patch it” is not a real sentence. Your buying test should be: can they map real industrial protocols, give you safe detection, and help you prove segmentation without breaking operations? Your SOC must be able to interpret what it sees; otherwise, alerts become noise (revisit IDS deployment and SIEM logic).

Buyer trap: over-collecting data without triage logic.

3) Nozomi Networks

Best for: OT monitoring with strong anomaly detection needs.

Nozomi is often shortlisted for deep OT visibility and detection, especially in high-consequence environments. Evaluate around detection quality: can you map detections to actual attacker paths, and can your team validate them without a PhD? This is where threat context matters—pair it with CTI collection so detections aren’t blind.

Buyer trap: assuming anomalies equal attacks; you need operational baselines.

4) Forescout

Best for: Enforcing policy on unmanaged devices (control, not just discovery).

Forescout becomes valuable when your pain point is enforcement: NAC-style control, segmentation triggers, and automated containment that doesn’t require agents. The buying test is operational safety: can it quarantine without taking down clinical devices or manufacturing lines? If you can’t answer that, you’re buying a risk generator. Align to access control models and the reality that weak identity + shared creds will ruin everything.

Buyer trap: buying enforcement without change-management discipline.

5) Microsoft Defender for IoT

Best for: Microsoft-heavy shops that want IoT/OT alerts inside existing security operations.

If your SOC already lives in Microsoft tooling, Defender for IoT can reduce tool sprawl. Evaluate it like you’d evaluate any detection source: logging coverage, alert fidelity, and correlation into IR execution. IoT detection that doesn’t translate into incident response actions is decorative.

Buyer trap: “integration” marketed as “security.”

6) Palo Alto Networks IoT/OT Security

Best for: Segmentation and policy enforcement when the network is your control plane.

If you’re solving IoT risk through segmentation, your enforcement layer is often firewalls. So your buying question is brutally simple: can it help you define and enforce allowed flows, then validate you didn’t accidentally create a shadow pathway? Use firewall types and configurations as your baseline language.

Buyer trap: assuming segmentation is one-time; it’s ongoing hygiene.

7) Cisco (IoT / Industrial Security approaches)

Best for: Large estates where network architecture is the leverage point.

Cisco fits when you already have the ecosystem and want to extend network visibility and control. But you must pressure-test unmanaged device workflows and how identity maps to network policy. Pair evaluation with VPN limitations because “remote access” is where IoT/OT often gets quietly exposed.

Buyer trap: architecture complexity that only Cisco experts can maintain.

8) Fortinet

Best for: Unified network security and segmentation across sites.

Fortinet can be compelling where consistent security policy across branches and factories matters. Your buyer focus: policy clarity, change control, and evidence that segmentation reduces attacker movement. If you can’t demonstrate that in audits, you’re vulnerable both to attackers and governance failure (ground in audit best practices).

Buyer trap: over-reliance on a “fabric” without operational readiness.

9) Zscaler

Best for: Zero trust access for admins and third parties.

Many IoT disasters begin with remote admin access that nobody controls well. Zscaler can reduce lateral movement when implemented with discipline, but you still need identity maturity, privileged control, and solid incident playbooks (tie to IRP development).

Buyer trap: thinking “zero trust access” fixes weak device security.

10) Cloudflare

Best for: Secure connectivity and edge enforcement patterns.

Cloudflare can be part of a strategy where you tighten tunnels, reduce exposure, and enforce controls at the edge. Evaluate certificate hygiene and key handling using PKI fundamentals and encryption standards.

Buyer trap: building connectivity fast and forgetting governance.

11) Akamai

Best for: Defending IoT-facing services from DDoS and edge abuse.

If your IoT devices talk to your cloud services, your risk is not only device compromise—it’s abuse at scale. Akamai’s strength often sits in edge resilience and DDoS mitigation, which aligns directly with DoS prevention.

Buyer trap: protecting the edge while ignoring device onboarding weaknesses.

12) Trend Micro (IoT/OT coverage varies by environment)

Best for: Broad threat coverage in mixed IT/OT programs.

Trend Micro often shows up where organizations want broad threat detection plus operational integration. Your buyer task is clarity: exactly which environments are supported, how telemetry is normalized into the SOC, and how response is executed. Anchor in SIEM workflows and CTI so it doesn’t become noise.

Buyer trap: “coverage” marketed without measurable efficacy.

13) CrowdStrike (Services + platform components)

Best for: Incident response and rapid containment playbooks.

IoT/OT incidents go sideways fast because teams are unpracticed. CrowdStrike’s value tends to be response muscle. Evaluate like a crisis buyer: first 72 hours, containment methods, and how they prevent repeat breaches—especially ransomware scenarios (tie to ransomware recovery).

Buyer trap: paying for IR after you should’ve funded readiness.

14) Palo Alto Unit 42

Best for: Threat-led response and post-incident hardening.

Unit 42 is often pulled in when incidents get real and you need investigators, not consultants. Evaluate their ability to translate findings into control changes: segmentation updates, credential resets, logging coverage, and improved detection logic. Anchor to incident response execution.

Buyer trap: treating IR outputs as a report, not a control backlog.

15) Mandiant

Best for: Adversary-focused investigations and readiness.

Mandiant’s differentiator is often adversary tradecraft and investigative rigor, which is critical when IoT compromise is only the first step. Evaluate their telemetry requirements (logs, flows, identity), how they integrate with your SOC, and how they improve detection engineering. Ground your SOC plan in SIEM basics.

Buyer trap: assuming “post-breach hardening” fixes fundamental inventory gaps.

16) Wiz

Best for: IoT cloud backends—visibility into the real data and permissions.

Many IoT breaches are cloud breaches with a device-shaped entry point. Wiz is relevant when your IoT platform runs on cloud-native services with complex identity, storage, and network paths. Pair evaluation with the future direction in cloud security trends.

Buyer trap: securing cloud posture while ignoring device onboarding and key hygiene.

17) Orca Security

Best for: Cloud risk prioritization for IoT workloads.

Orca fits in a similar space: seeing cloud risk and prioritizing remediation. Your buyer focus is time-to-fix and proof of control improvement—don’t buy cloud dashboards that don’t change engineering behavior. Connect this back to governance requirements and audit evidence (see audit best practices).

Buyer trap: perfect findings lists with no remediation machinery.

18) Prisma Cloud

Best for: Organizations that want CNAPP breadth for IoT backends and pipelines.

If your IoT product is software-heavy, your exposure includes CI/CD credentials, container supply chain, and misconfigurations. Buy with a supply-chain mindset and tie it to the “future tools” wave in AI-driven cybersecurity tooling.

Buyer trap: enabling everything and overwhelming teams with alerts.

19) Snyk

Best for: IoT manufacturers that must govern open source, SBOM, and dev security.

Snyk is relevant when you ship software and need developer-friendly controls that actually get adopted. Your buyer test: can you prove SBOM coverage, fix SLA adherence, and vulnerability closure in a way that satisfies evolving compliance expectations? CRA timelines matter here.

Buyer trap: security tooling that dev teams bypass because it slows releases.

20) Black Duck (formerly part of Synopsys)

Best for: Mature product security programs and supply chain governance.

If you’re operating a real product security function, you’ll value consistency and evidence: SBOM governance, disclosure workflows, and measurable reduction in known-vulnerable components. Tie governance to security audits so evidence survives scrutiny.

Buyer trap: treating SBOM as a PDF instead of a living control.

21) Splunk

Best for: Centralizing IoT/OT telemetry into investigations and response workflows.

Splunk is not “IoT security” by itself, but it becomes the operational brain when you normalize telemetry and build detection logic. Your buyer focus: data onboarding, correlation rules, noise control, and response automation, grounded in SIEM fundamentals.

Buyer trap: ingesting everything and going broke on data volume.

22) Microsoft Sentinel

Best for: Microsoft-centric SIEM with automation and integration.

If Sentinel is your SOC anchor, evaluate IoT vendors by how cleanly they feed into Sentinel: normalized alerts, context fields, and playbook triggers that support IR execution.

Buyer trap: treating SIEM onboarding as “done” without tuning.

23) Palo Alto Cortex (SIEM/XSIAM ecosystem depending on deployment)

Best for: Detection + automation in environments that want consolidation.

The buyer test is painful but necessary: false positive rate, triage speed, and how quickly it improves your decision-making. If “automation” doesn’t shorten time-to-containment, it’s marketing. Tie it back to SIEM overview.

Buyer trap: automating bad detections faster.

24) Tenable

Best for: Exposure management discipline where vuln backlog is killing you.

IoT vulnerability management fails because patching isn’t feasible and ownership is unclear. Tenable can support prioritization and reporting, but you must build a strategy for “unpatchable” controls: segmentation, monitoring, compensating controls. Ground it in vulnerability assessment techniques and enforcement via firewalls.

Buyer trap: creating a backlog you can’t possibly burn down.

25) Rapid7

Best for: Risk-based remediation workflows and practical exposure reduction.

Rapid7 fits teams that need help turning findings into actions, not just identifying problems. The buyer question: can they help you shorten remediation cycle time, and can they connect findings to real attacker paths (pair with CTI)?

Buyer trap: using scanners as a replacement for risk ownership.

Important 2026–2027 note: independent review sites and market pages often rank tools differently (and sometimes reflect user mindshare rather than objective fit). Treat them as signals, not truth.

3. Buyer’s Rubric: The 12 Questions That Separate Real IoT Security From “Inventory Theater”

If you want a shortlist that survives reality, force every vendor into the same rubric. This is how you prevent the classic pain: “We bought visibility, then still got breached.”

Discovery accuracy: Can you prove coverage across VLANs, sites, wireless, and remote segments—without agents?

Classification quality: Does it identify device type, model, OS/firmware, and behavior with confidence?

Identity mapping: How do devices authenticate? Shared creds? Certificates? Keys? Tie to PKI and encryption standards.

Segmentation readiness: Can it recommend and enforce “allowed flows” using firewall policy?

Unpatchable strategy: What’s the control model when patching is impossible? (This is where programs collapse.)

Detection fidelity: What detections map to real attacker behaviors, not generic anomalies? Pair with IDS.

SOC integration: Can your SOC consume this in your SIEM without drowning?

Response actions: Can you contain safely? Quarantine? ACL updates? Credential rotation?

Evidence + audits: What proof artifacts exist for security audits?

Threat intel: How does it use CTI to prioritize?

Resilience: How does it reduce ransomware blast radius (tie to ransomware response)?

Operational ownership: Who runs it day-to-day, and what does “done” look like?

If a vendor can’t answer these cleanly, you’re about to buy complexity—not security.
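
One way to force every vendor through the same rubric is to score the twelve answers on a fixed scale and compare totals. The sketch below is a minimal illustration, not a standard method: the short question keys, the 0 to 3 rating scale, and the optional weights are all assumptions made for this example.

```python
from __future__ import annotations

# Hypothetical short keys for the twelve rubric questions, in the order listed above.
RUBRIC = [
    "discovery_accuracy",
    "classification_quality",
    "identity_mapping",
    "segmentation_readiness",
    "unpatchable_strategy",
    "detection_fidelity",
    "soc_integration",
    "response_actions",
    "evidence_audits",
    "threat_intel",
    "resilience",
    "operational_ownership",
]

# Assumed scale: 0 = no answer, 1 = claimed, 2 = demonstrated, 3 = proven in your own pilot.
MAX_RATING = 3


def score_vendor(answers: dict[str, int], weights: dict[str, float] | None = None) -> float:
    """Return a 0-100 score; unanswered questions count as zero."""
    weights = weights or {}
    earned = 0.0
    possible = 0.0
    for question in RUBRIC:
        rating = answers.get(question, 0)
        if not 0 <= rating <= MAX_RATING:
            raise ValueError(f"{question}: rating must be between 0 and {MAX_RATING}")
        weight = weights.get(question, 1.0)
        earned += weight * rating
        possible += weight * MAX_RATING
    if possible == 0:
        raise ValueError("at least one question needs a non-zero weight")
    return round(100 * earned / possible, 1)


def weak_answers(answers: dict[str, int], floor: int = 1) -> list[str]:
    """List the questions a vendor could not answer beyond a bare claim."""
    return [q for q in RUBRIC if answers.get(q, 0) <= floor]


if __name__ == "__main__":
    vendor_a = {q: 3 for q in RUBRIC}
    vendor_a["unpatchable_strategy"] = 0  # strong everywhere except the unpatchable story
    print(score_vendor(vendor_a), weak_answers(vendor_a))
    # Weighting the unpatchable question 3x makes that single gap much more visible.
    print(score_vendor(vendor_a, weights={"unpatchable_strategy": 3.0}))
```

The number is only useful for comparing vendors rated by the same people against the same evidence. The list of weak answers is usually the more honest output, because it tells you what to pressure-test in the pilot.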

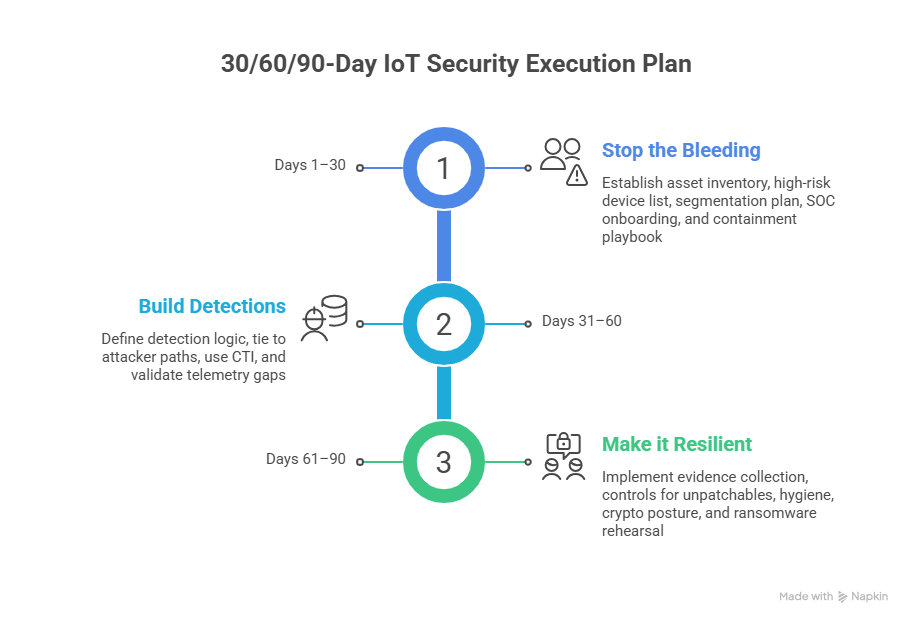

4. Build a Real IoT Security Program (Not a Tool Pile): The 30/60/90-Day Execution Plan

This is where most teams fail: they buy a platform, install sensors, and then… nothing changes. The unowned backlog grows, segmentation stays “later,” and the next incident proves you purchased visibility, not risk reduction.

Days 1–30: Stop the bleeding (visibility → control)

Deliverables that actually matter:

A verified asset inventory with ownership tags (who is accountable for each device class)

A “high-risk device” list with clear criteria (internet exposure, weak auth, EOL firmware, sensitive segment proximity)

A segmentation-first plan grounded in firewall configurations

SOC onboarding plan: what feeds your SIEM, what gets filtered, and what triggers escalation

A containment playbook aligned to incident response execution

Non-negotiable outcome: you reduce the number of “unknown, unmanaged, internet-touching” devices. If that number doesn’t drop, you didn’t implement security—you documented insecurity.
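
The Days 1–30 deliverables can be expressed as a small, repeatable calculation over your inventory export. This is a minimal sketch under stated assumptions: the `Device` fields, the sample device names, and the "two or more criteria" threshold are hypothetical, and a real inventory would come from your discovery tool rather than hand-written records.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Device:
    name: str
    owner: Optional[str]           # accountable team; None means nobody owns it yet
    managed: bool
    internet_exposed: bool
    weak_auth: bool                # default or shared credentials
    eol_firmware: bool
    near_sensitive_segment: bool


def risk_reasons(device: Device) -> list[str]:
    """Return which of the four high-risk criteria this device meets."""
    checks = [
        ("internet exposure", device.internet_exposed),
        ("weak auth", device.weak_auth),
        ("EOL firmware", device.eol_firmware),
        ("sensitive segment proximity", device.near_sensitive_segment),
    ]
    return [label for label, hit in checks if hit]


def high_risk_list(devices: list[Device], min_reasons: int = 2) -> list[tuple[str, list[str]]]:
    """Devices meeting at least min_reasons criteria, worst first."""
    rows = [(d.name, risk_reasons(d)) for d in devices]
    rows = [row for row in rows if len(row[1]) >= min_reasons]
    return sorted(rows, key=lambda row: len(row[1]), reverse=True)


def unknown_unmanaged_exposed(devices: list[Device]) -> int:
    """The number that has to drop: no owner, not managed, touching the internet."""
    return sum(1 for d in devices if d.owner is None and not d.managed and d.internet_exposed)


if __name__ == "__main__":
    inventory = [
        Device("lobby-camera", None, False, True, True, True, False),
        Device("lab-printer", "facilities", True, False, True, False, False),
        Device("plant-gateway", "ot-team", False, True, False, False, True),
    ]
    for name, reasons in high_risk_list(inventory):
        print(name, reasons)
    print("unknown/unmanaged/internet-touching:", unknown_unmanaged_exposed(inventory))
```

Run the same calculation at day 1 and day 30. If the final count has not moved, the inventory work produced documentation rather than control.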

Days 31–60: Build detections that map to attacker paths

Your IoT signals must become investigations, not notifications.

Define detection logic around actual abuse: credential guessing, unexpected protocol use, lateral scanning, unusual egress, command channels

Tie detections to known-scale behaviors like botnet coordination and DoS patterns

Use CTI to prioritize: not every device event is worth human time

Validate telemetry gaps with IDS deployment

Non-negotiable outcome: reduced false positives + faster time to confident triage.
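
To make "lateral scanning" concrete, here is a minimal sketch of one such detection: flag any source that contacts many distinct destinations inside a short sliding window. The flow tuple layout, the 60-second window, and the threshold of 20 destinations are assumptions for illustration; real thresholds should come from your own baselines, and this is not how any particular vendor implements it.

```python
from __future__ import annotations

from collections import Counter, defaultdict, deque


def find_lateral_scanners(
    flows: list[tuple[float, str, str]],
    window_s: float = 60,
    min_distinct_dsts: int = 20,
) -> dict[str, int]:
    """flows are (timestamp_seconds, source, destination) records.

    Returns {source: peak distinct destinations seen in any window} for every
    source whose peak reaches min_distinct_dsts. Assumes window_s > 0.
    """
    by_source: dict[str, list[tuple[float, str]]] = defaultdict(list)
    for ts, src, dst in flows:
        by_source[src].append((ts, dst))

    flagged: dict[str, int] = {}
    for src, events in by_source.items():
        events.sort()
        window: deque[tuple[float, str]] = deque()
        in_window: Counter[str] = Counter()
        peak = 0
        for ts, dst in events:
            window.append((ts, dst))
            in_window[dst] += 1
            # Drop events that fell out of the window (ts - window_s, ts].
            while window[0][0] <= ts - window_s:
                _, old_dst = window.popleft()
                in_window[old_dst] -= 1
                if in_window[old_dst] == 0:
                    del in_window[old_dst]
            peak = max(peak, len(in_window))
        if peak >= min_distinct_dsts:
            flagged[src] = peak
    return flagged


if __name__ == "__main__":
    # A camera sweeping 25 hosts in 25 seconds, next to a printer with one peer.
    sample = [(i, "cam-1", f"10.0.0.{i}") for i in range(25)]
    sample.append((5, "printer-1", "10.0.0.5"))
    print(find_lateral_scanners(sample))
```

A device that legitimately talks to many peers, such as a management server, will trip this rule. That is the baseline problem in practice: the detection only becomes an investigation once known-chatty devices are allowlisted.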

Days 61–90: Make it resilient and auditable

Now you make it survivable:

Evidence collection aligned to security audits

Controls for unpatchables: segmentation + monitoring + credential governance + compensating controls

Key hygiene and crypto posture anchored to encryption standards and PKI governance

Ransomware containment and recovery rehearsal aligned to ransomware detection/response/recovery

Non-negotiable outcome: you can prove controls exist, operate, and are tested—without relying on “trust us” statements.

5. 2026–2027 Buying Traps (That Make Good Teams Look Incompetent)

Trap 1: “We’ll patch later.”

IoT patching is a fantasy in many environments. If your strategy depends on patching everything, you have no strategy. Build unpatchable controls through segmentation, logging, and credential governance—then enforce them with firewalls and validate with IDS.
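
The core of an "unpatchable" control is an explicit allowed-flow list that you compare against what the network actually carries. The following is a minimal sketch of that comparison; the segment names and the allowlist entries are hypothetical, and observed flows would in practice come from firewall logs or flow telemetry.

```python
from __future__ import annotations

# Hypothetical allowlist: (source segment, destination segment, destination port).
ALLOWED_FLOWS = {
    ("cameras", "video-recorder", 554),
    ("badge-readers", "access-controller", 443),
    ("hvac", "building-mgmt", 47808),
}


def policy_violations(observed, allowed=ALLOWED_FLOWS):
    """Observed flows that the policy does not allow, de-duplicated and sorted."""
    return sorted(set(observed) - set(allowed))


def unused_rules(observed, allowed=ALLOWED_FLOWS):
    """Allowed flows never seen in traffic: candidates for removal or review."""
    return sorted(set(allowed) - set(observed))


if __name__ == "__main__":
    seen = [
        ("cameras", "video-recorder", 554),
        ("cameras", "finance-db", 1433),
        ("cameras", "finance-db", 1433),
        ("hvac", "internet", 23),
    ]
    print("violations:", policy_violations(seen))
    print("unused rules:", unused_rules(seen))
```

Violations are what the firewall should be blocking and the IDS should be alerting on. Unused rules are where policy drift tends to hide, so review both lists on a schedule rather than once.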

Trap 2: “We bought an IoT platform, so we’re secure.”

Platforms don’t fix ownership. Security improves only when someone owns:

device onboarding standards

credential rotation

allowed-flow policy

incident playbooks (IRP execution)

Trap 3: “We’ll send everything to the SIEM.”

That’s how you create a SOC that hates you. Send what you can act on and tune it like a product. Use your SIEM overview as a baseline and measure false positives relentlessly.

Trap 4: “Compliance will force security.”

Compliance can force documentation, not outcomes. Regulatory pressure is real (CRA timelines are approaching), but your program must still reduce attacker pathways and prove control effectiveness.

Trap 5: “Labels mean safe devices.”

Labeling programs can help, but implementation and governance can change. Use labels as a signal, not a substitute for your own validation and continuous monitoring.

6. FAQs: High-Value IoT Security Questions Buyers Should Ask

-

None. The best vendor depends on whether your primary problem is visibility, enforcement, OT detection, cloud backend risk, or product security compliance. Use the rubric in Section 3 and map it to your environment.

-

Three layers matter most:

Segmentation (limit who can talk to what) via firewall policy

Monitoring + detections (spot abnormal behaviors) via SIEM and IDS

Credential/key governance anchored in PKI and encryption standards

-

Treat IoT as a segmentation + detection problem, not a patch problem. Build strict allowed flows, monitor east-west movement, and rehearse containment with your incident response plan. Then validate recovery readiness using ransomware recovery guidance.

-

Find and eliminate internet-exposed IoT management interfaces and weak remote admin paths. It’s the most common silent risk and it’s how “minor devices” become major incidents. Validate access control assumptions using DAC/MAC/RBAC basics.

-

Track:

% of devices with known owner and classification confidence

% of high-risk devices reduced month over month

segmentation policy coverage and drift rate

detection precision (true positives as a share of all alerts raised)

time-to-containment from first alert

Tie governance to security audit practices so metrics survive scrutiny.
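
Several of these metrics are simple enough to compute from exports you already have. The sketch below assumes precision is defined as true positives divided by all alerts raised, and that time-to-containment is reported as a median in minutes; the function names and input shapes are hypothetical.

```python
from __future__ import annotations

from statistics import median


def percent(part: int, whole: int) -> float:
    """Percentage rounded to one decimal; 0.0 when there is nothing to measure."""
    return round(100 * part / whole, 1) if whole else 0.0


def detection_precision(true_positives: int, false_positives: int) -> float:
    """Share of raised alerts that turned out to be real."""
    total = true_positives + false_positives
    return round(true_positives / total, 3) if total else 0.0


def median_time_to_containment(incidents: list[tuple[float, float]]) -> float:
    """incidents are (first_alert_minute, contained_minute) pairs."""
    return median(contained - first for first, contained in incidents)


if __name__ == "__main__":
    print("devices with owner %:", percent(180, 240))
    print("precision:", detection_precision(30, 10))
    print("median minutes to containment:", median_time_to_containment([(0, 45), (10, 40), (5, 125)]))
```

The median is used instead of the mean so that one long-running incident does not hide an otherwise improving trend. Report the worst case separately if auditors ask for it.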

-

Start now on vulnerability handling, evidence generation, and secure development lifecycle governance—CRA timelines are not far away, especially for reporting obligations.

Practically: SBOM discipline, disclosure processes, and cryptographic key governance that can be proven.
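
To keep the CRA dates in front of a product team, a small countdown helps. This sketch uses only the three dates stated earlier in this article; the milestone labels and function name are illustrative.

```python
from __future__ import annotations

from datetime import date

# Dates as stated in Section 1 of this article.
CRA_MILESTONES = {
    "entered into force": date(2024, 12, 10),
    "reporting obligations apply": date(2026, 9, 11),
    "main obligations apply": date(2027, 12, 11),
}


def days_until(milestone: date, today: date) -> int:
    """Days remaining; negative means the milestone has already passed."""
    return (milestone - today).days


if __name__ == "__main__":
    today = date.today()
    for label, when in CRA_MILESTONES.items():
        print(f"{label}: {when.isoformat()} ({days_until(when, today)} days)")
```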