AI-Powered Cyberattacks: Predicting Future Threats & Defenses (2026–2030)

AI is not just helping defenders. It is industrializing attackers. Between 2026 and 2030, the winners will not be the teams with the most tools. They will be the teams with the fastest truth loop: detect what matters, prove what happened, contain with consistency, and harden the exact control that would stop the next variant. If your endpoint, identity, email, and cloud telemetry are still fragmented, AI will turn that gap into speed for the adversary. This guide breaks down the most likely AI-driven threats and the defenses that actually scale.

1) The AI-powered threat landscape from 2026 to 2030: what changes and why it hurts

Attackers are adopting AI the same way high-performing growth teams adopt automation. They measure output, remove friction, and run experiments until conversion improves. That means your pain points become their product roadmap: weak identity controls, slow incident response, and inconsistent detection coverage. If you are still treating phishing as a user problem instead of a system problem, you are already behind. Your workforce skills plan needs to evolve now, not later, because the “human-only” tasks are shrinking fast. Build that foundation using ACSMI’s roadmap on future cybersecurity competencies and align it to the operational reality described in the next-gen standards predictions.

1) AI makes reconnaissance continuous, cheap, and deeply personalized

From 2026 onward, recon becomes a 24/7 pipeline. Models will scrape public data, leaked credentials, employee posts, vendor documentation, and job listings, then convert that into tailored pretexting and targeting. The painful part is not “more recon.” The painful part is recon that adapts faster than your security awareness cycle. If your organization has repeated visibility gaps, attackers will learn them and route around your controls. Use the patterns in ACSMI’s evolution of threats analysis and apply them to your environment, especially if you have distributed endpoints or contractors.

This is why security teams that still rely on static asset inventories get blindsided. If your asset list is stale, AI-driven recon will find the shadow endpoints and the cloud workloads you forgot. That “blind spot” becomes the attacker’s staging platform. Pair your threat planning with the realities in the IoT breaches report and the broader global market outlook to understand where coverage and budget are actually heading.
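One way to make the stale-inventory problem concrete is to diff the recorded inventory against what telemetry actually sees. The sketch below is a minimal illustration, not a product feature. It assumes a hypothetical inventory dictionary keyed by asset ID with a last-verified timestamp, and a set of asset IDs observed in network or cloud logs. The function name `inventory_gaps` and the 30-day staleness threshold are assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone


def inventory_gaps(inventory, observed, now, stale_after=timedelta(days=30)):
    """Compare a recorded inventory with assets actually seen in telemetry.

    inventory: dict mapping asset ID -> datetime it was last verified (hypothetical shape)
    observed:  set of asset IDs seen in telemetry
    """
    # Seen in telemetry but absent from the inventory: candidate shadow assets.
    shadow = sorted(observed - set(inventory))
    # Listed in the inventory but not verified recently: stale entries.
    stale = sorted(a for a, verified in inventory.items() if now - verified > stale_after)
    return {"shadow": shadow, "stale": stale}


now = datetime(2026, 1, 31, tzinfo=timezone.utc)
inventory = {
    "web-01": now - timedelta(days=5),
    "db-01": now - timedelta(days=90),
}
observed = {"web-01", "iot-cam-7"}
gaps = inventory_gaps(inventory, observed, now)
print(gaps)
```

Running a comparison like this on a schedule turns "our asset list might be stale" into a list of specific endpoints to investigate.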

2) AI makes social engineering harder to spot and easier to scale

The future is not just deepfakes. It is “frictionless persuasion.” AI will generate emails, voice snippets, chat messages, and meeting invites that match your internal language. Your staff will not see obvious red flags. The attacker will test multiple variations until one hits, just like ad optimization. If your detection strategy is still built around known templates, it will miss the novel and the personalized.

Your best defense is engineering-based, not training-based. You need layered email controls, identity verification rituals, and endpoint containment that does not depend on analyst heroics. Ground this in the operational lessons from the phishing trends report and connect it to compliance expectations in the cybersecurity compliance trends report. If you operate in regulated sectors, also map controls against the direction of privacy regulation predictions and the next wave of GDPR evolution.

3) AI accelerates lateral movement and privilege abuse

From 2026 to 2030, attackers will increasingly treat identity as the main battlefield. AI will help them discover role misconfigurations, token reuse opportunities, and privilege escalation paths inside hybrid environments. If your identity telemetry is not integrated into your endpoint investigation flow, you will lose time and lose context. This is not theoretical. “Valid login” abuse is already one of the most damaging patterns across industries.

If you want a practical framing, study how modern security programs are evolving endpoint controls in ACSMI’s analysis of advances in endpoint security by 2027 and combine it with the governance expectations in the future of compliance regulations. When identity is the route, your endpoint is the evidence. Your response model must unify both.

4) AI compresses attacker dwell time and increases “quiet” exfiltration

The biggest misconception is that future AI attacks will be louder. Many will be quieter. AI can help attackers choose the lowest-noise path, time exfiltration to blend into normal traffic, and stage data in places your monitoring ignores. If your organization struggles with alert overload today, AI will turn that into missed signals tomorrow. Your detection engineering must evolve toward high-confidence signals and better triage.

This is where original data matters. Review the 2025 data breach report and the state of ransomware analysis to see what attackers already optimize for. Then project forward: AI makes those workflows faster, cheaper, and more repeatable. If your containment steps are inconsistent, AI-driven campaigns will exploit that inconsistency again and again.
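To illustrate why quiet exfiltration slips past per-event thresholds, the sketch below sums outbound bytes per host and destination over a window and flags only destinations outside the host's known baseline. It is a simplified example under stated assumptions: the flow tuples, the `baseline` mapping, and the 50 MB threshold are all hypothetical, and a real deployment would tune them against its own traffic.

```python
from collections import defaultdict


def slow_exfil_candidates(flows, baseline, min_total_bytes=50_000_000):
    """Flag host/destination pairs whose cumulative outbound volume is large
    even though each individual transfer is small.

    flows:    iterable of (host, destination, bytes_out) tuples (hypothetical shape)
    baseline: dict mapping host -> set of destinations considered normal
    """
    totals = defaultdict(int)
    for host, destination, bytes_out in flows:
        # Ignore traffic to destinations already in the host's baseline.
        if destination not in baseline.get(host, set()):
            totals[(host, destination)] += bytes_out
    return sorted(
        (host, dest, total)
        for (host, dest), total in totals.items()
        if total >= min_total_bytes
    )


baseline = {"fin-laptop-3": {"updates.example.com"}}
# Thirty small transfers to an unfamiliar destination, plus one large but expected download.
flows = [("fin-laptop-3", "files.example.net", 2_000_000)] * 30
flows.append(("fin-laptop-3", "updates.example.com", 900_000_000))
candidates = slow_exfil_candidates(flows, baseline)
print(candidates)
```

No single 2 MB transfer would trip a volume alert, but the cumulative view surfaces the pattern.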

2) How AI will reshape the attack chain: predictions you can plan against

Most security programs fail because they plan for tools, not for attacker workflows. AI changes workflows. It turns every phase of the attack chain into something faster and more adaptive. If you want to predict 2026 to 2030 threats accurately, you must think in terms of feedback loops: attackers try, measure, refine, then repeat. Defenders must do the same.

Recon to initial access: “precision at scale”

AI will sharpen targeting so campaigns waste less time on low-value users. That raises the odds that any malicious message reaching your users was aimed at them deliberately. If you treat alerts as “maybe later,” you create dwell time. Build your detection plan with the mindset of modern SOC maturity, and benchmark your career path and operational discipline using ACSMI’s guidance on SOC analyst development and the leadership expectations in SOC manager progression.

Defensively, reduce the attacker’s decision surface. Lock down identity recovery, harden MFA workflows, monitor unusual token behavior, and set clear escalation gates. Tie this to compliance realities, because regulators will not accept “we trained users” as a control when identity risk is systemic. Use ACSMI’s direction on audit practice evolution and NIST framework adoption to convert your security narrative into evidence.

Execution to persistence: “stealth becomes a product feature”

AI does not just create malware. It creates methods. Attackers will increasingly use legitimate tools, native scripts, and cloud features in sequences that look normal. That makes purely signature-based defenses fragile. You need behavioral controls, hardened baselines, and consistent endpoint response playbooks. If your endpoint security program is still reactive, anchor it to the predictions in endpoint security advances by 2027 and validate your gaps against the state of endpoint security data.

The hard pain point here is investigation time. If evidence is scattered, your team bleeds hours. AI-driven attackers exploit that time gap. Your response must compress it. That means centralizing telemetry, standardizing containment, and turning “tribal knowledge” into playbooks that junior analysts can execute safely. If your response varies by analyst, you will never scale.

Exfiltration and impact: “fast monetization, fast exit”

Between 2026 and 2030, expect more attacks that monetize quickly: extortion, data theft, fraud, and disruption. AI helps attackers prioritize targets that are more likely to pay or more likely to panic. If you operate in finance, healthcare, or public sector environments, the blast radius is higher, and your defenses must be more proactive. Use sector insights from ACSMI’s predictions in finance cybersecurity, healthcare cybersecurity, and government/public sector risks.

Your defensive priority is containment speed and data control. If you cannot reliably see staging, encryption prep, or unusual compression, you will find out too late. Map these risks to a measurable program: egress allowlists, immutable backups, privileged access hardening, and endpoint isolation that works even when identity is compromised.

3) AI vs AI: defenses that scale without burning out your SOC

A lot of teams will buy “AI security” and still lose. Why? Because their fundamentals are weak. AI amplifies whatever you already are. If your telemetry is messy, AI produces confident noise. If your playbooks are inconsistent, AI makes inconsistency faster. The correct approach is to make AI an accelerant for a disciplined system.

1) Build a high-signal telemetry spine

Your biggest enemy is not a lack of data. It is data that cannot be trusted or correlated quickly. A scalable defense requires consistent endpoint logging, identity context, and a clear chain of custody for evidence. If you are planning SIEM modernization, align it with the forward-looking direction in next-gen SIEM predictions and the expectations of future cybersecurity standards.

Design your telemetry so each alert answers these questions immediately:

Who is the actor, and is the identity trusted?

What endpoint or workload is affected?

What changed, and what was the sequence?

What evidence supports malicious intent?

What containment action is safe right now?

If your analysts still “hunt for context,” you are losing time. AI attackers exploit time.
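The five questions above can be encoded as required fields on every alert, so missing context is visible before an analyst starts hunting for it. This is a minimal sketch: the `AlertContext` class and its field names are hypothetical and do not correspond to any specific SIEM schema.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass
class AlertContext:
    actor: Optional[str] = None                   # who is the actor
    identity_trusted: Optional[bool] = None       # is the identity trusted
    affected_asset: Optional[str] = None          # which endpoint or workload
    change_sequence: Optional[List[str]] = None   # what changed, in order
    evidence: Optional[List[str]] = None          # what supports malicious intent
    safe_containment: Optional[str] = None        # which action is safe right now


def missing_context(alert: AlertContext) -> List[str]:
    """Return the names of questions the alert cannot answer yet."""
    missing = []
    for f in fields(alert):
        value = getattr(alert, f.name)
        # False is a valid answer for identity_trusted, so only None and
        # empty values count as missing.
        if value is None or value == "" or value == []:
            missing.append(f.name)
    return missing


alert = AlertContext(actor="svc-backup", identity_trusted=False, affected_asset="db-01")
unanswered = missing_context(alert)
print(unanswered)
```

Tracking how often alerts arrive with unanswered fields gives a direct measure of how much time analysts spend rebuilding context by hand.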

2) Reduce alert overload by focusing on containment-grade signals

The goal is not detection. The goal is decisions you can act on. When AI makes attacks more adaptive, your detections must become more resilient. That means building detections around behaviors that are hard to fake: abnormal token use, unusual privilege grants, suspicious process trees, persistence artifacts, and unexpected lateral movement patterns.
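As an example of a behavior-based signal, the sketch below flags process trees where an office application spawns a scripting host or shell. The parent and child pairs are illustrative assumptions, not a complete or authoritative list, and the event dictionaries are a hypothetical shape rather than the output of any particular EDR product.

```python
# Illustrative parent -> child pairs that rarely occur in normal office work.
SUSPICIOUS_PAIRS = {
    ("winword.exe", "powershell.exe"),
    ("excel.exe", "cmd.exe"),
    ("outlook.exe", "wscript.exe"),
}


def suspicious_process_events(events):
    """Return process-creation events whose parent/child pair is on the list."""
    hits = []
    for event in events:
        # Normalize case, since process names are often logged inconsistently.
        pair = (event["parent"].lower(), event["child"].lower())
        if pair in SUSPICIOUS_PAIRS:
            hits.append(event)
    return hits


events = [
    {"host": "hr-pc-2", "parent": "WINWORD.EXE", "child": "powershell.exe"},
    {"host": "hr-pc-2", "parent": "explorer.exe", "child": "winword.exe"},
]
hits = suspicious_process_events(events)
print(hits)
```

The point is that the signal keys on what the process tree does, not on the content of the lure, so changing the wording of a phishing email does not evade it.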

Tie your detection engineering to the operational reality of AI adoption in cybersecurity and the market direction in the global cybersecurity report. This keeps your program aligned with what is actually changing in the field, not what vendors promise.

3) Make response consistent with automation plus guardrails

The mistake is automating everything. The right move is automating the safe 80 percent and building guardrails around high-risk actions. Containment should not depend on who is on shift. If one analyst isolates a host and another waits two hours, your “program” is not a program. It is luck.

Build runbooks for:

credential reset and token revocation

endpoint isolation

high-risk process termination

suspicious account disablement

backup protection escalation

evidence preservation steps

Then measure them. If you do not track consistency, you cannot improve it. Use the structured thinking in ACSMI’s audit innovation analysis because the future will demand proof, not stories.
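The "automation plus guardrails" idea can be sketched as a runbook table in which low-risk actions run automatically and high-risk actions wait for a named approver, with every request written to an audit log. Everything here is hypothetical: the action names, the choice of which actions need approval, and the `request_action` function are assumptions for illustration, and the code only records decisions rather than calling a real EDR or identity API.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class RunbookAction:
    name: str
    auto_approved: bool  # True for steps treated as low-risk and reversible


# Which actions are auto-approved is an assumption for this example.
RUNBOOK = {
    "isolate_endpoint": RunbookAction("isolate_endpoint", auto_approved=True),
    "revoke_tokens": RunbookAction("revoke_tokens", auto_approved=True),
    "preserve_evidence": RunbookAction("preserve_evidence", auto_approved=True),
    "terminate_process": RunbookAction("terminate_process", auto_approved=False),
}


def request_action(name, target, audit_log, approved_by=None):
    """Record a containment request; high-risk actions wait for an approver."""
    action = RUNBOOK[name]
    allowed = action.auto_approved or approved_by is not None
    record = {
        "time": datetime.now(timezone.utc).isoformat(),
        "action": name,
        "target": target,
        "approved_by": approved_by,
        "status": "executed" if allowed else "pending_approval",
    }
    # Every request is logged, whether or not it ran, so consistency can be measured.
    audit_log.append(record)
    return record


log = []
first = request_action("isolate_endpoint", "hr-pc-2", log)
second = request_action("terminate_process", "hr-pc-2", log)
third = request_action("terminate_process", "hr-pc-2", log, approved_by="oncall-lead")
print([r["status"] for r in log])
```

Because the decision lives in the table rather than in an analyst's head, the same alert produces the same first response on every shift.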

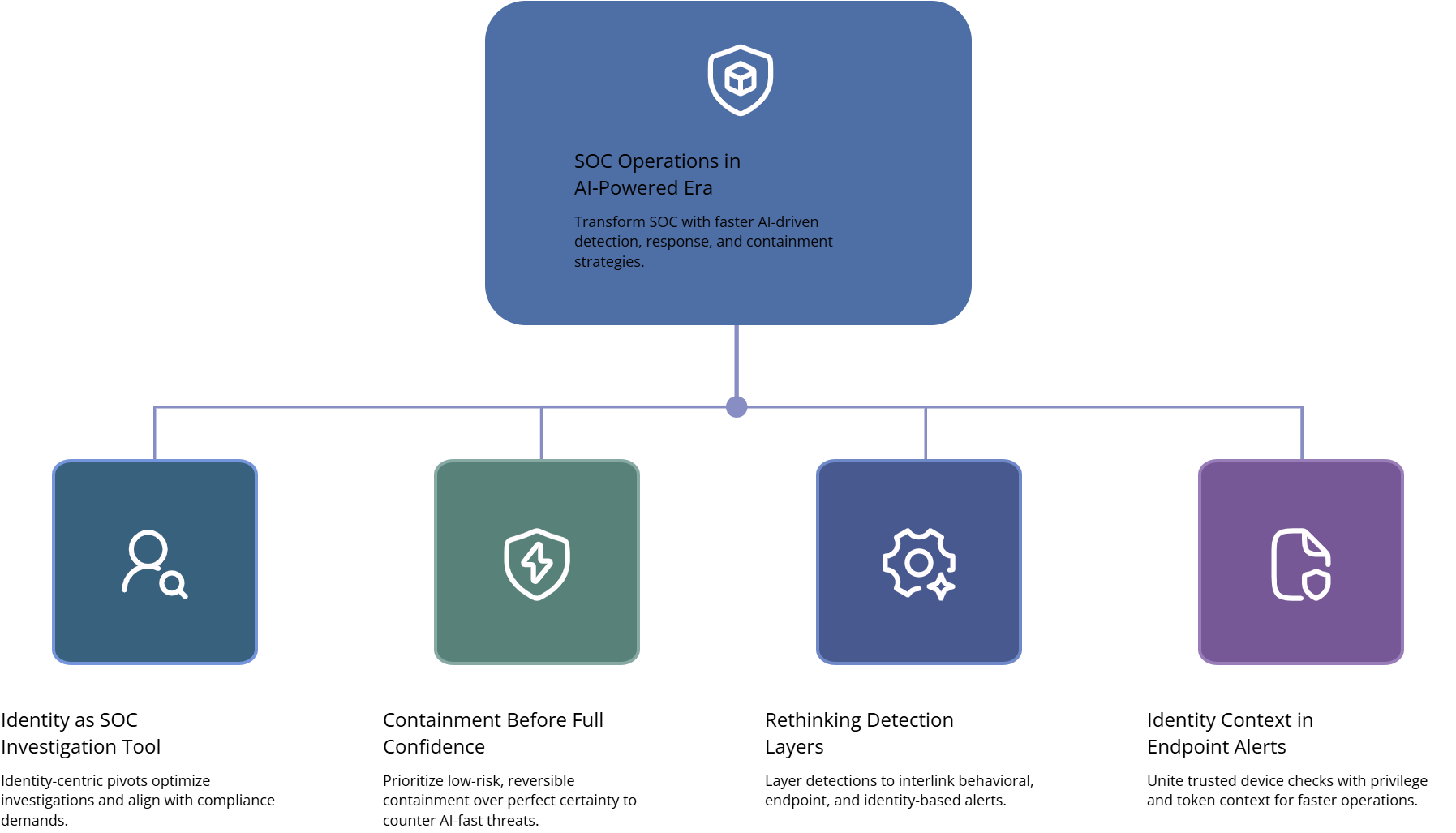

4) SOC execution in the AI era: detection engineering, investigations, and containment that work

AI-driven threats punish teams that rely on manual correlation. If your incident response is still “collect logs, ask questions, then decide,” you are too slow. Your SOC needs a new operating model that treats speed and consistency as first-class outcomes. This is exactly why career development matters, because the SOC of 2026 is a different job. Anchor training and role design to ACSMI’s guidance on specialized cybersecurity roles and the reality check in automation and workforce predictions.

1) Treat identity as the primary investigation pivot

When “valid login” becomes the most common path, endpoint alerts without identity context are incomplete. Build investigations that pivot from identity to endpoint and back again. For every suspicious login, the SOC should instantly answer: was the device trusted, was the session token normal, did privilege change, and did the endpoint behavior match the role? If you cannot answer in minutes, your process is broken.
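The four pivot questions can be answered by one function that joins identity and endpoint data for a single login. The sketch below is a simplified illustration: the login dictionary, the trusted-device set, the per-role process baseline, and the field names are all hypothetical shapes, not the schema of any identity provider.

```python
def identity_pivot(login, trusted_devices, role_baseline, endpoint_processes):
    """Answer the four pivot questions for one suspicious login."""
    expected = role_baseline.get(login["role"], set())
    return {
        "device_trusted": login["device_id"] in trusted_devices,
        "token_normal": not login["token_seen_from_new_ip"],
        "privilege_changed": set(login["privileges_after"]) != set(login["privileges_before"]),
        # True only if every observed process is expected for the role.
        "behavior_matches_role": set(endpoint_processes) <= expected,
    }


login = {
    "user": "j.doe",
    "role": "finance-analyst",
    "device_id": "laptop-114",
    "token_seen_from_new_ip": True,
    "privileges_before": ["reports:read"],
    "privileges_after": ["reports:read", "payments:approve"],
}
answers = identity_pivot(
    login,
    trusted_devices={"laptop-114"},
    role_baseline={"finance-analyst": {"excel.exe", "outlook.exe"}},
    endpoint_processes=["excel.exe", "powershell.exe"],
)
print(answers)
```

In this example the device is trusted, yet the token, the privilege change, and the endpoint behavior all look wrong, which is exactly the "valid login" pattern that an endpoint-only view would miss.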

This is also where compliance pressure increases. Regulators care about access governance and auditability. Align controls to the direction in cybersecurity legislation impacts for SMBs and the broader compliance future outlook. Even if you are not regulated, your customers are.

2) Build “containment first” playbooks

If you wait for perfect certainty, you lose. AI-driven attackers move fast. Your playbooks should prioritize reversible, low-risk containment actions: isolate host, revoke token, disable account, block destination, preserve evidence. Then investigate deeper.

Use the operational discipline described in the ransomware threat analysis and translate it into containment SLAs. If your average time to isolate a host is longer than the attacker's average time to spread from it, you will always be reactive.
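The comparison between isolation time and spread time is simple arithmetic once both are measured. The sketch below assumes you have per-incident measurements in minutes from your own incident records; the function name and the sample numbers are made up for illustration.

```python
from statistics import mean


def containment_gap(isolation_minutes, spread_minutes):
    """Average time to isolate minus average time to spread.

    A positive result means isolation typically happens after the
    attacker has already moved to another system.
    """
    return mean(isolation_minutes) - mean(spread_minutes)


# Hypothetical measurements from past incidents, in minutes.
isolation_minutes = [45, 90, 120]
spread_minutes = [30, 60, 48]
gap = containment_gap(isolation_minutes, spread_minutes)
print(gap)
```

A containment SLA is then a target for driving this gap below zero, and the number is easy to report alongside other program metrics.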

3) Validate detection logic against adversarial behavior

Expect attackers to probe your detections. If you rely on a single model score or a single rule, attackers will tune around it. Build layered detections: one behavioral alert triggers an identity check, which triggers an endpoint verification, which triggers containment if the evidence matches. You are building a system, not a rule library.
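The layered chain described above can be sketched as an ordered list of checks, where containment is recommended only if every stage corroborates the previous one. This is a minimal illustration: the stage names follow the paragraph, while the function name and the use of callables for each check are assumptions of the example.

```python
def layered_verdict(behavior_alert, identity_check, endpoint_check):
    """Walk the chain in order and stop at the first stage that does not corroborate.

    Each argument is a zero-argument callable returning True or False, so
    later (more expensive) checks run only when earlier ones fire.
    """
    stages = [
        ("behavior", behavior_alert),
        ("identity", identity_check),
        ("endpoint", endpoint_check),
    ]
    confirmed = []
    for name, check in stages:
        if not check():
            return {"decision": "monitor", "confirmed": confirmed, "stopped_at": name}
        confirmed.append(name)
    return {"decision": "contain", "confirmed": confirmed, "stopped_at": None}


full_match = layered_verdict(lambda: True, lambda: True, lambda: True)
partial = layered_verdict(lambda: True, lambda: False, lambda: True)
print(full_match["decision"], partial["decision"], partial["stopped_at"])
```

An attacker who tunes around one rule still has to defeat the other stages, and the `confirmed` list doubles as the evidence trail for why containment was triggered.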

If you are modernizing logging and monitoring, align it with the future direction in next-gen SIEM technologies and the evidence expectations in audit practice innovations. If your SOC cannot prove a claim, it is not defensible.

5) Governance, regulation, and readiness: how to build defenses that survive 2026 to 2030

Tools expire. Governance sticks. Between 2026 and 2030, organizations will be judged by whether they can demonstrate control, accountability, and measurable improvement. AI-driven attacks will increase scrutiny because the “unpredictability” excuse will not hold. Leaders will be expected to show how they reduce risk systematically.

1) Turn AI risk into policy with real enforcement

If your organization uses AI internally, you must address prompt-injection risk, data leakage, and agent behavior. That means defining what data can be used, where it can be stored, and how outputs are validated. Do not write policy that no one can enforce. Write policy tied to technical controls and evidence.

To keep governance aligned with the direction of the industry, map your program against the future-facing insights in AI in cybersecurity adoption and the compliance trajectory in GDPR and cybersecurity best practices. If you operate internationally, also track emerging shifts in global privacy regulation trends.

2) Build a workforce plan that matches the real threat model

From 2026 onward, the workforce challenge is not “we need more people.” It is “we need people who can operate systems.” Your analysts must understand detection engineering, automation guardrails, identity workflows, and incident command. This is why competency planning matters. Use ACSMI’s blueprint on future skills for cybersecurity and pair it with role demand signals in specialized roles predictions.

If you want a practical career lens, connect this to structured pathways like ethical hacking roadmaps and progression tracks such as junior penetration tester to senior consultant. Even if you are not hiring, these frameworks help you build internal capability.

3) Make measurement non-negotiable

AI attacks will expose weak metrics. If your KPIs are vanity metrics, you will not improve. Track:

time to contain (not just time to detect)

percent of alerts that lead to action

repeat incidents by root cause

coverage for critical assets

identity anomalies investigated vs ignored

backup integrity test success

Then force post-incident closure. Every incident must result in a hardened control, not a PDF report. Use industry perspective from the cybersecurity market report to align measurement with executive expectations and budget reality.
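Several of the metrics listed above can be computed directly from incident and alert records. The sketch below is an illustration under stated assumptions: the record fields are hypothetical, time to contain is measured from detection to containment, and both input lists are assumed to be non-empty.

```python
from collections import Counter
from datetime import datetime
from statistics import mean


def soc_metrics(incidents, alerts):
    """Compute three of the tracked metrics from non-empty record lists."""
    contain_minutes = [
        (i["contained_at"] - i["detected_at"]).total_seconds() / 60 for i in incidents
    ]
    actioned = sum(1 for a in alerts if a["action_taken"])
    root_causes = Counter(i["root_cause"] for i in incidents)
    return {
        "mean_minutes_to_contain": mean(contain_minutes),
        "percent_alerts_actioned": 100 * actioned / len(alerts),
        # Only root causes seen more than once count as repeats.
        "repeat_root_causes": {c: n for c, n in root_causes.items() if n > 1},
    }


t0 = datetime(2026, 3, 1, 9, 0)
incidents = [
    {"detected_at": t0, "contained_at": datetime(2026, 3, 1, 9, 30), "root_cause": "stale_token"},
    {"detected_at": t0, "contained_at": datetime(2026, 3, 1, 10, 30), "root_cause": "stale_token"},
    {"detected_at": t0, "contained_at": datetime(2026, 3, 1, 10, 0), "root_cause": "unpatched_vpn"},
]
alerts = [{"action_taken": True}, {"action_taken": False},
          {"action_taken": False}, {"action_taken": False}]
metrics = soc_metrics(incidents, alerts)
print(metrics)
```

A repeat root cause in this output is a direct pointer to a control that was not hardened after the first incident.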

6) FAQs: AI-powered cyberattacks and defenses (2026 to 2030)

-

The highest-probability path is identity-driven: AI-personalized phishing leads to credential capture, token reuse, then lateral movement through misconfigured access. Mid-sized organizations often have inconsistent MFA coverage and weaker identity recovery controls, which makes “valid login” abuse extremely effective. The best defense is to harden identity, reduce token risk, and unify endpoint plus identity investigation. Use the control direction in endpoint security advances by 2027 and align it with governance expectations in future compliance trends.

-

AI will replace repetitive tasks, not accountability. Triage, enrichment, and correlation will become more automated, but incident decisions still need humans who understand risk, business impact, and safe containment. The real shift is that analysts will be expected to operate automation and build detections, not just react to alerts. Plan your hiring and training using ACSMI’s analysis of automation in the cybersecurity workforce and the competency roadmap in future cybersecurity skills.

-

The failures are structural: weak DMARC enforcement, inconsistent MFA, permissive password reset flows, and approvals that rely on informal trust. Training helps, but it cannot keep up with AI variation testing. The scalable defense is layered verification and hardened identity workflows. If you want an evidence-based starting point, review the patterns in the phishing trends report and connect them to regulator expectations in the compliance trends report.
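Weak DMARC enforcement is easy to check mechanically. The sketch below parses the text of a DMARC record and reports whether it enforces a `quarantine` or `reject` policy for all mail. It works on a record string you have already retrieved, so no DNS lookup is performed here, and the function names are hypothetical. Treating a `pct` value below 100 as "not fully enforced" is a judgment made for this example.

```python
def parse_dmarc(record):
    """Split a DMARC TXT record into a dict of tag -> value."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_enforced(record):
    """True if the policy is quarantine or reject and applies to all mail."""
    tags = parse_dmarc(record)
    if tags.get("v") != "DMARC1":
        return False
    policy = tags.get("p", "").lower()
    # pct defaults to 100 when the tag is absent.
    pct = int(tags.get("pct", "100"))
    return policy in {"quarantine", "reject"} and pct == 100


monitor_only = dmarc_enforced("v=DMARC1; p=none; rua=mailto:dmarc@example.com")
rejecting = dmarc_enforced("v=DMARC1; p=reject")
partial_rollout = dmarc_enforced("v=DMARC1; p=quarantine; pct=50")
print(monitor_only, rejecting, partial_rollout)
```

A domain that has sat at `p=none` for years is monitoring spoofing, not stopping it.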

-

You reduce alert overload by designing for action. Stop building detections that cannot drive containment. Focus on high-confidence signals: abnormal identity behavior, suspicious privilege changes, rare process chains, persistence artifacts, and unusual outbound destinations. Then build playbooks that convert those signals into consistent actions. If your SIEM and detection strategy is being refreshed, align it with the forward view in next-gen SIEM technologies and the evidence requirements in future audit practices.

-

They assume AI fixes fundamentals. AI does not fix missing telemetry, poor endpoint coverage, or inconsistent response. It amplifies whatever you already do. If your data is messy, AI produces confident noise. If your response is inconsistent, AI produces inconsistent automation. Leaders should prioritize a telemetry spine, standardized playbooks, and measurable containment speed before adding more “smart” layers. Use the evidence from the state of endpoint security report and align strategy with the global market direction.

-

Compliance will shift toward proof of control effectiveness, not just documented policy. Expect more scrutiny around identity governance, audit trails, breach readiness, and data protection, especially as AI increases the speed and scale of attacks. The strongest move is to map security controls to evidence, then continuously test and report on results. Track evolving expectations using future compliance predictions, privacy regulation trends, and the evolving standards view in next-generation cybersecurity standards.

-

Pick three outcomes and measure them weekly: endpoint coverage for critical assets, time to contain, and repeat incidents by root cause. Then build playbooks that make containment consistent. Add identity hardening that reduces token abuse and tightens recovery. Finally, validate backups and test recovery like it is a production system, not a checkbox. If you want a threat-driven lens, connect this to the lessons in the ransomware analysis and operational maturity guidance from the SOC analyst pathway.